Since I first tested DALL-E, I’ve been on something of a rollercoaster with AI image generation and generative AI in general, but has everything just changed with the new GPT-4o image generation capabilities? Let’s do a deep dive into GPT-4o vs DALL-E 3, Stable Diffusion, and other models to see how they compare.

Learn all about ChatGPT Image Generation using the latest GPT-4o model

Like many people, I started experimenting with these tools out of sheer curiosity: playing with early versions, watching them struggle with basic tasks, and yet being surprised by what was possible at the same time. It’s been exciting but, if I’m honest, mostly disappointing until recently.

For me, the greatest sticking point has always been text in AI images. To begin with, AI couldn’t add words, not even short ones; they came out as blurred, jumbled characters and symbols, almost a mishmash of languages and numbers. Then we started to see single numbers and characters rendered correctly, followed by very short words like “AI” and “The”.

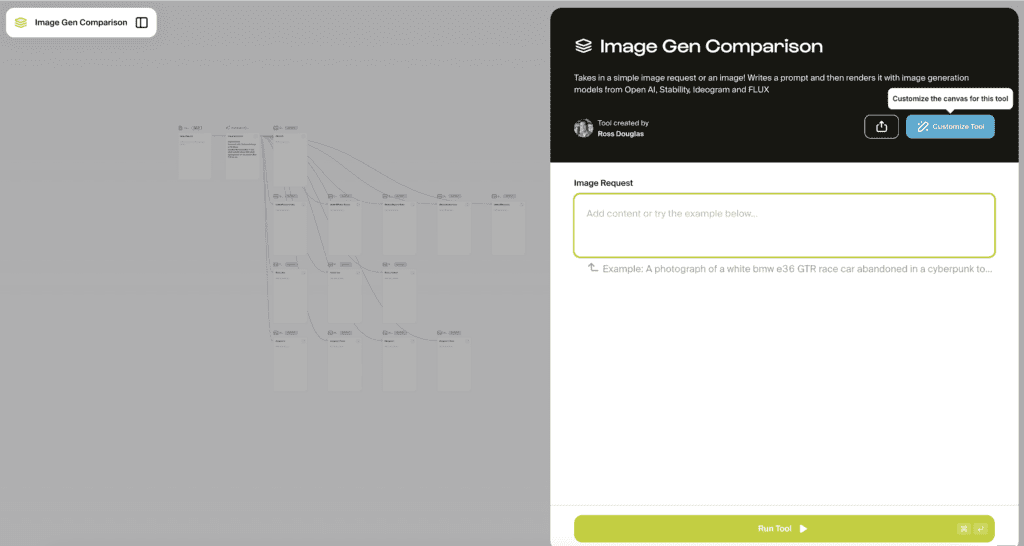

I noticed things improving very recently when using https://hunch.tools (I highly recommend it for experimenting with AI).

One of the Hunch tools is excellent because it lets you compare how different AI models perform by generating results in parallel. When testing the latest models like DALL-E 3, FLUX.1 Pro, Stable Diffusion 3, Ideogram 2, and Google Imagen 3, I noticed they were getting better at text. Instead of short words, some of them could now add labels and headings correctly. I would stress that this only worked for around 3-5 words at most before they reverted to the same old gibberish. Still, it seemed like a leap.

The hunch.tools AI image generation comparison tool

The short video below shows an example of using the various image generation models in Hunch to compare the results.

I know that for other people, the struggles with AI image generation have centred on faces and hands, reflections, shadows, or producing photorealistic images. I guess it really depends on your use case or requirements for image content, but I think most of us who have experimented with AI images at some stage would struggle to be proud of the output, or happy to use it in any serious setting.

Based on OpenAI’s image generation announcement below and my experimentation today, this is about to change:

https://openai.com/index/introducing-4o-image-generation/


What I’ve discovered through extensive testing is that GPT-4o represents not just another incremental improvement in AI image generation, but a fundamental change in how these systems work and what they can achieve. Integrating image generation directly into the language model itself (rather than as a separate bolt-on component) has solved some of the issues that made other tools interesting but frustrating to work with.

In this guide, I’ll share what I’ve learned through my hands-on experimentation with the GPT-4o image generation capabilities, comparing it to other leading tools, testing it against common challenges, and exploring whether it’s genuinely practical for everyday professional use. Whether you’re a designer, marketer, educator, or just curious about the latest advancements in AI, I hope my experiences will help you understand what’s possible and the challenges that still remain.

Key Takeaways:

- GPT-4o represents a fundamental change in AI image generation, integrating it directly into a multimodal language model rather than as a separate component

- The model successfully addresses many longstanding challenges in AI image generation, including text rendering, anatomical accuracy, and realistic reflections

- The conversational, iterative approach to image creation transforms the creative process, making it more accessible to users without needing specialist knowledge

- GPT-4o democratises access to high-quality visual content creation, empowering users regardless of their technical expertise or design background

- While generation speed remains a limitation, the quality improvements generally justify the wait for most applications

- The technology continues to evolve rapidly, with ongoing improvements in speed, quality, and capability expected almost daily

1. What Makes GPT-4o’s Image Generation Different: The Omnimodel Approach

2. Solving the Big Three: Text, Hands, and Reflections

3. Technical Capabilities: Is GPT-4o Practical for Everyday Use?

4. How is GPT-4o Image Generation Different to DALL-E Image Generation?

5. Detailed Comparisons: GPT-4o vs. Other AI Image Generators

6. Key Takeaways and Future Outlook

7. Practical Test Cases: What I’ve Tried and What I’ve Learned

8. Final Thoughts on ChatGPT Image Generation

What Makes GPT-4o’s Image Generation Different: The Omnimodel Approach

Before diving into specific capabilities, it’s important to understand what makes GPT-4o fundamentally different from other AI image generators like DALL-E 3 or Midjourney. The key lies in what OpenAI researchers call the “omnimodel” approach.

Unlike previous systems, where a language model would interpret your text prompt and then hand it off to a separate image generator, GPT-4o integrates these capabilities natively. As I’ve interacted with it, I’ve noticed this isn’t just a technical detail; it totally transforms the experience.

The difference became apparent in my first conversations with GPT-4o. Rather than carefully crafting the perfect prompt and hoping for the best (as I’d become accustomed to with other tools or models like DALL-E), I found myself simply describing what I wanted in natural language. When the results weren’t quite right, I could refine them through normal conversation to produce a new version or iteration, much as I would when working with a human designer.

With simple feedback prompts, like “Redo in style [xyz]”, “Remove the background”, or “Add more detail”, the model understood and implemented these changes while maintaining all the other elements of the image that I liked. This conversational, iterative approach feels significantly more natural and efficient than the one-shot process of traditional image generators.

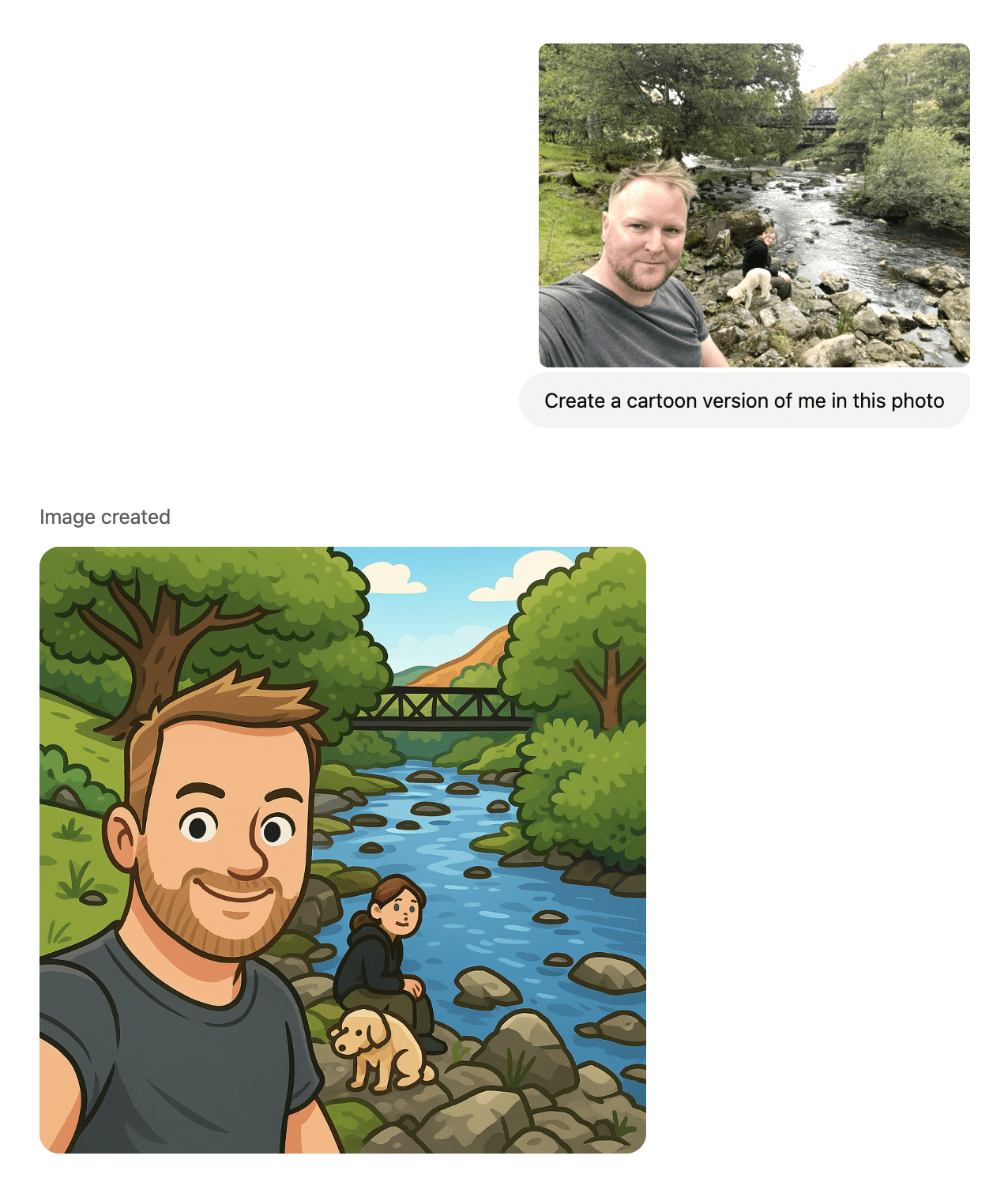

Here’s a photo of me enjoying a little holiday in Wales with the family last summer. Just look at how accurately it converts it into a cartoon style.

ChatGPT image generation – convert my photo to a cartoon

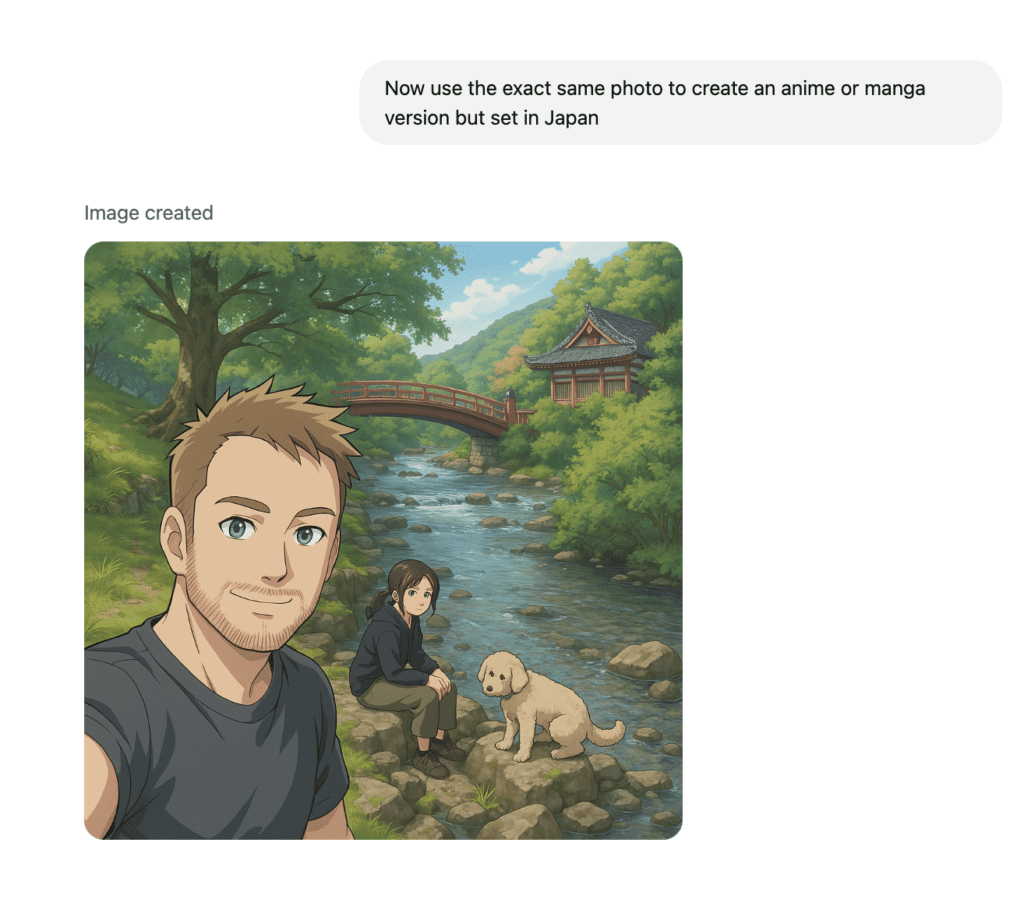

To really push the boundaries of GPT-4o, I then asked for an anime version set in Japan.

GPT-4o converting one ChatGPT image style to another with a slightly different setting.

The omnimodel approach also means GPT-4o can leverage its understanding of language, context, and the world when generating images. It can incorporate information from our previous conversation turns, understand complex instructions that reference multiple concepts, and generate images that reflect a deeper understanding of what I’m asking for.

This integration across modalities represents a significant departure from the compartmentalised approach of previous AI systems and points toward a future where AI can engage with the world in ways that more closely resemble human thinking and behaviour.

Solving the Big Three: Text, Hands, and Reflections

If you’ve used AI image generators before, you’ll be familiar with their most notorious limitations.

For years, these tools have struggled with three seemingly simple but challenging aspects of image creation: rendering text accurately, drawing anatomically correct hands, and creating realistic reflections or transparent objects.

These aren’t minor issues; they’re fundamental limitations that have kept AI image generators out of many practical and professional applications.

So naturally, these were the first things I tested when exploring GPT-4o’s capabilities.

Text Rendering: Can GPT-4o Finally Add Text in AI Images?

The inability to render clean, accurate text has been the most frustrating limitation of previous AI image generators for me.

As someone who occasionally needs to create infographics, marketing materials, featured images for blog posts, and educational content, I’ve been repeatedly disappointed by garbled text, spelling errors, and formatting issues in AI-generated images.

The example below is one I just ran with DALL-E 3, requesting a poster to promote AI that contained roughly 200 words of text I supplied. As you can see, some of the text looks familiar, but not a single word or phrase is actually correct.

text in AI images issue – example using DALL-E 3

My tests with GPT-4o revealed a significant breakthrough in this area, a much bigger leap than I saw when testing the latest models only a week earlier.

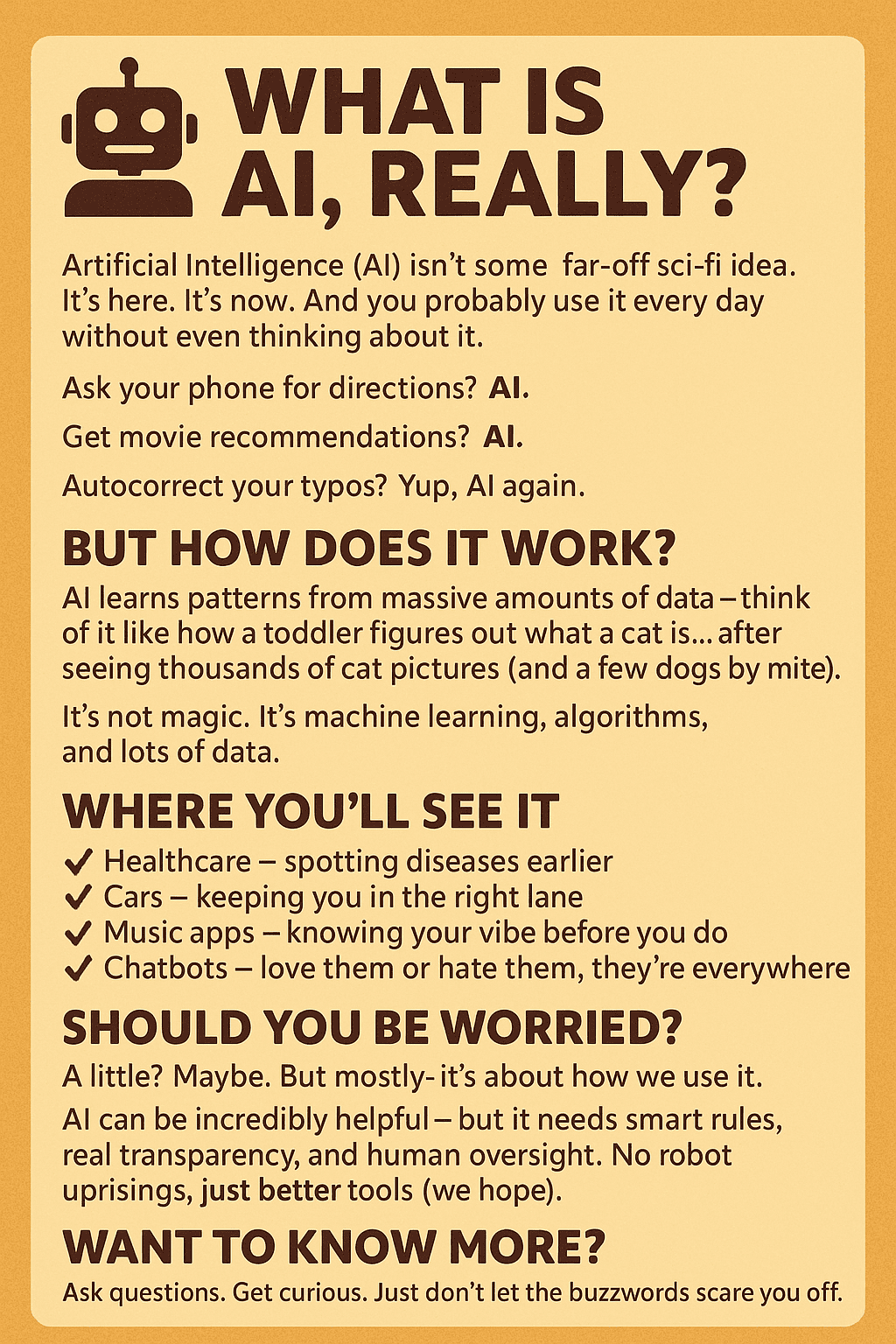

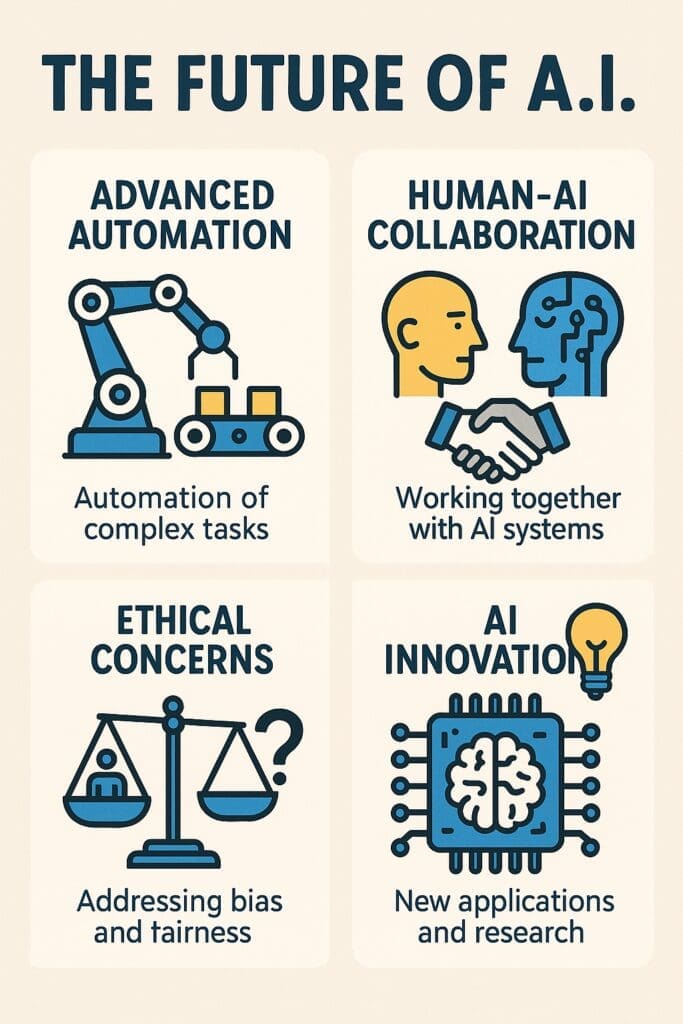

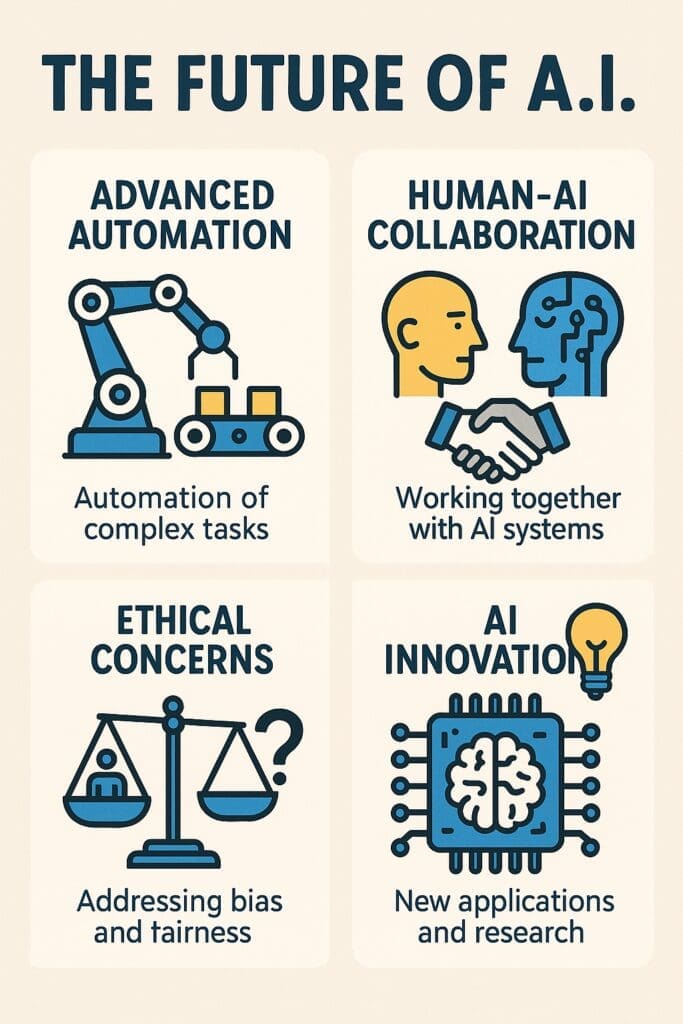

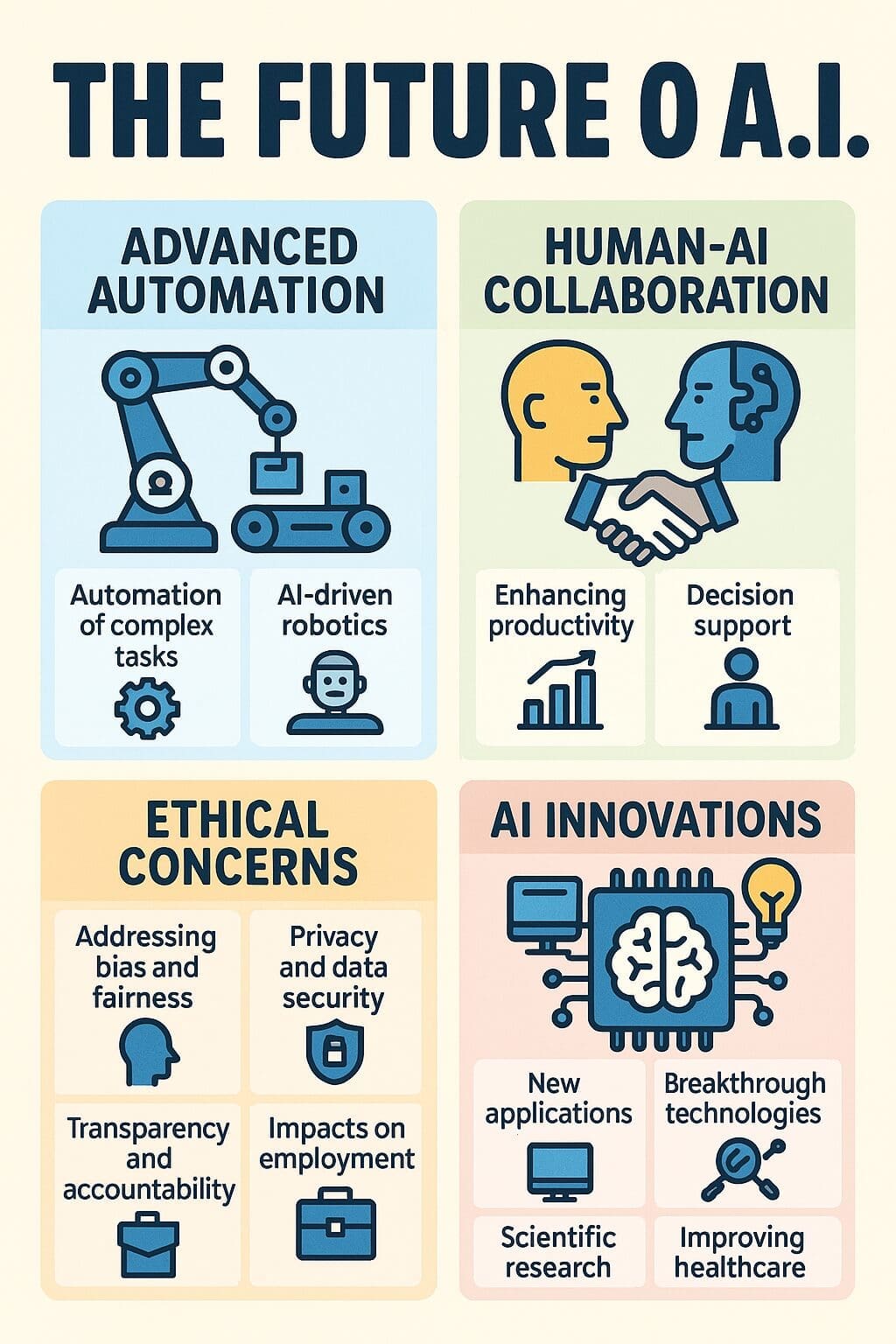

When I asked it to create an image of a short infographic about artificial intelligence, I was genuinely surprised by the result. The text was perfectly legible, properly formatted, and free of the spelling errors and jumbled words that plagued other models.

This wasn’t a fluke. I pushed further, requesting another example, then different typography, and then text wrapped around curved surfaces. In each case, GPT-4o maintained remarkably high quality. It’s still not perfect, particularly with very small text, complex requests, or long layouts, but the improvement over previous models is dramatic.

Repeating the poster test from above, GPT-4o gets it spot on, as the example below shows. As far as I can tell, every sentence, word, and character is exactly as I requested.

text in AI images issue – resolved in GPT-4o

The practical implications are substantial. I can now use GPT-4o to create infographics, social media banners with text overlays, product mockups with descriptions, and other text-heavy visual content without worrying about embarrassing errors.

Hands and Anatomy: No More Extra Fingers

The rendering of human hands and fingers has been another persistent challenge for AI image generators, so common that viral memes have gone around the web and unusual hand configurations have become a widely recognised indicator of AI-generated content.

AI-generated images: fingers and hands. Source: BuzzFeed News. “Human hands” generated by Stable Diffusion (left); the same issues have also been reported with Midjourney and DALL-E 2.

It’s not just hands though. There has always been something off about how AI images handle human anatomy. For example:

- Facial features look correct at a quick glance, but look like something from a horror story when you look closer, with things not quite in the right place.

- Extra fingers or toes, or even an arm that somehow gets shared between two people.

- A body facing one way while the head is turned at an impossible angle.

In my testing, GPT-4o demonstrated significantly improved handling of hands and fingers. When I asked it to generate images of hands in different poses (pointing, grasping, waving, and making a peace sign), the results were consistently accurate.

This improvement extends beyond just hands to overall anatomical accuracy. Human figures have more realistic proportions, facial features are better aligned, and complex poses are more naturally rendered. When I asked for different photo images of people, all of the faces looked realistic when zoomed in.

I don’t often need realistic human figures for the graphics I create, but this advancement removes a major barrier for anyone wanting to use AI-generated imagery in professional contexts.

Reflections and Transparency: Physics That Makes Sense

Reflections, transparency, and other complex visual phenomena have challenged AI image generators since their inception. Previous models struggled with inconsistent reflections, artificial-looking glass, and physically impossible light behaviour.

My tests with GPT-4o revealed significant improvements in this area as well. When I asked it to generate an image of a person standing in front of a large mirror, the reflection actually matched the subject. Water reflections showed appropriate distortion, transparent materials like glass demonstrated realistic light refraction, and complex scenes with multiple reflective elements maintained physical plausibility.

In the example below, I asked for a photorealistic image of somebody standing by a window with a big puddle at their feet, to see whether reflections would show correctly in both the puddle and the window. I won’t show what every model produced, but none of the others managed to show a reflection in both the window and the puddle. Google’s Imagen 3 and Stable Diffusion 3 looked accurate, but only showed a reflection in the puddle.

The best attempt at showing reflections in both came from GPT-4o, but it’s still flawed. At a glance, everything looks fine, but you quickly see that the reflection in the window isn’t right; it even looks like a different person. Then you notice the reflection of the reflection in the puddle, which sadly reminds me of the six-finger issue.

GPT-4o image reflection example

Again, it’s not perfect, particularly with very complex arrangements of reflective and transparent surfaces, but the improvement over previous models is remarkable. For my work creating product visualisations and realistic environments, this advancement makes GPT-4o significantly more useful than previous tools.

Technical Capabilities: Is GPT-4o Practical for Everyday Use?

Technical capabilities are important, but they only matter if they translate into practical utility for real-world tasks. As someone who occasionally needs to create visual content but isn’t a professional designer, I was particularly interested in whether GPT-4o would be accessible and useful for everyday creative tasks.

The Conversation Factor: Natural Interaction Changes Everything

The most striking aspect of working with GPT-4o’s image generation isn’t any single technical capability but rather the conversational nature of the interaction. Rather than treating image generation as a one-shot process where a text prompt goes in and an image comes out, GPT-4o enables an iterative approach to image creation.

I can provide feedback, request edits, and refine my images through natural dialogue, much as I would when working with a graphic designer. This transforms the creative process from a technical challenge into a collaborative conversation.

For example, I might start with a rough concept, “Create an image of a modern office space with natural light”. When I see the result, I can refine it based on what I like and don’t like, “Make the space feel more open and add some plants”. GPT-4o understands and implements these changes while maintaining the elements I didn’t ask to change.

I don’t need to learn or master specialised prompt engineering techniques or image parameter settings; I can simply describe what I want in plain language and iteratively refine the result until it matches my vision.

As somebody who uses tools like Adobe Photoshop, Canva, and Adobe Creative Cloud, I know these tools all come with useful AI image editing features, but until now, I wouldn’t have considered ChatGPT image creation suitable for editing.

Editing Capabilities: Transformation and Refinement

The editing capabilities of GPT-4o extend beyond simple adjustments to include complex changes. I was particularly impressed by its ability to take an existing image and transform it into a completely different style while maintaining recognisable features and details. For example, “Create a cartoon version of my selfie”, or “Create a greyscale version of my image”.

This kind of transformation, which combines understanding of the input image with creative generation of a new image, represents a level of sophistication that I haven’t seen in previous models.

It’s not just limited to ChatGPT-generated images, either; you can upload your own graphics, visuals, or photography and ask for edits. My work often involves adapting and customising visual assets, so this flexibility is incredibly valuable.

Democratising Design: Professional-Quality Results Without Professional Skills

Perhaps the most significant aspect of GPT-4o for many people will be its ability to democratise access to professional-quality visual content creation.

As someone with limited design skills, I’ve often found myself constrained by what I could create with basic tools or limited by the cost of professional design services. Failing that, I would turn to free or royalty-free stock photography sites like Pexels or Pixabay, or consider premium stock photography sites like iStock or Shutterstock.

GPT-4o changes that equation. I can now create high-quality image content and visual assets without mastering complex design tools, hiring external help, paying for images, or worrying about copyright concerns (for now anyway).

The conversational interface makes the process accessible regardless of technical expertise, and the quality of the output is high enough for many professional applications.

This democratisation has practical implications across numerous fields:

- Small business owners can create marketing materials without professional graphic design or photography services

- Educators can produce engaging visual learning resources without graphic design backgrounds

- Students can illustrate their ideas with professional-quality visuals

- Content creators can generate consistent visual assets without mastering complex design tools

For me, this accessibility represents the most transformative aspect of GPT-4o’s image generation capabilities. It’s not just about what it can create, it’s about who can create with it.

How is GPT-4o Image Generation Different to DALL-E Image Generation?

The first question that came to mind about GPT-4o image generation was whether it was simply the next version of DALL-E (i.e. DALL-E 4).

The answer is emphatically no. It represents an entirely different approach to AI image creation.

A Completely New Integrated Architecture, Not DALL-E 4

Despite both being created by OpenAI, GPT-4o’s image generation is not DALL-E 4 or even an iteration of the DALL-E family.

Instead, it’s built on what OpenAI researchers call the “omnimodel” approach mentioned above, a fundamental architectural shift in how AI generates images.

The DALL-E models (from the original through DALL-E 3) were separate, specialised systems designed specifically for image generation. They operated as distinct models that connected to language models through an interface. When you used DALL-E through ChatGPT, you were essentially using two different AI systems working in sequence, one to understand your request and another to generate the image.

GPT-4o integrates image generation as a native capability within the large language model itself. As I learned from OpenAI’s announcement, this project began about two years ago as

“a scientific question about what native support for image generation would look like in a model as powerful as GPT-4.”

The result is an AI that doesn’t just connect to an image generator, it is the image generator.

From Modular to Integrated Understanding

This architectural difference creates significant implications for how the system works:

DALL-E models receive a text prompt (sometimes refined by ChatGPT), interpret that prompt, and then generate a corresponding image. It’s a linear, one-directional process where the language understanding and image generation are separate steps.

GPT-4o, on the other hand, understands language, images, and their relationships as part of the same cognitive process. There’s no handoff between systems: the same model that interprets your request also generates the image. This integrated understanding enables GPT-4o to:

- Maintain conversation context across multiple turns

- Understand subtle refinements and adjustments

- Remember previous images and modifications

- Apply its broad knowledge base directly to image generation

- Create images that more accurately reflect complex instructions

In my testing, this integration created a much more intuitive creative process. Rather than writing the perfect prompt to get what I want in one shot (as with DALL-E), I can have a natural conversation about the image I’m trying to create, refining it through dialogue until it matches my vision.

Even the featured image for this guide was created by GPT-4o. In this test, I threw the whole article (13 pages at nearly 5,000 words at the time of writing) at ChatGPT and just asked for a featured image.

Detailed Comparisons: GPT-4o vs. Other AI Image Generators

While my primary focus has been exploring GPT-4o’s capabilities, I’ve also been curious about how it compares to other leading AI image generators.

Here’s what I’ve found through my testing and comparisons.

For each one, I’ve used the same two test cases so you can easily compare the results:

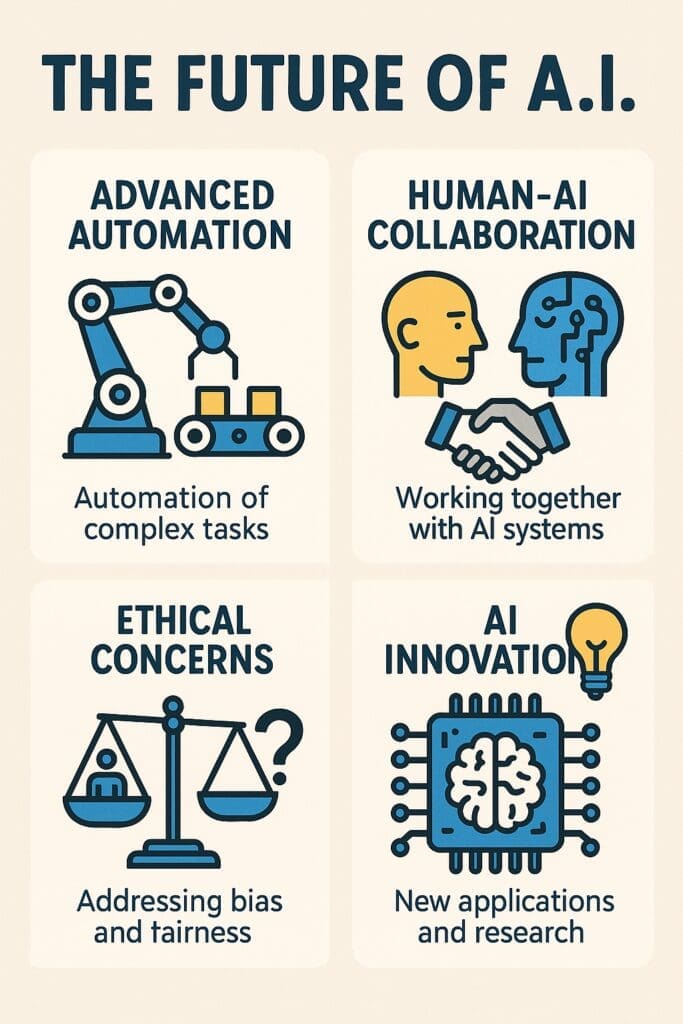

- Prompt 1: Create an infographic with 4 sections to illustrate the future of AI. Show key themes using icons, text and visuals.

- Prompt 2: Create a high-resolution, super realistic photo of a business person covering their face with both hands in shame.

GPT-4o vs. DALL-E 3

DALL-E 3 represented a significant improvement over its predecessors, but GPT-4o surpasses it in several key areas:

- Text rendering: While DALL-E 3 improved on earlier versions, it still struggles with longer passages and complex formatting. GPT-4o renders text accurately far more often, even in paragraphs and complex layouts, though very small text can still trip it up.

- Anatomical accuracy: DALL-E 3 produces noticeable errors, particularly with hands and complex poses. GPT-4o consistently generates anatomically correct figures with proper proportions and natural positioning.

- Conversation and context: DALL-E 3 is integrated with ChatGPT but lacks the deep multimodal integration of GPT-4o. This limits its ability to incorporate conversation context and engage in natural refinement.

- Editing capabilities: DALL-E 3 offers basic editing but lacks the sophisticated transformation capabilities of GPT-4o, particularly for complex edits that require understanding the content and context of the original image.

The most significant difference, however, is the conversational, iterative workflow that GPT-4o enables. While DALL-E 3 improved image quality, GPT-4o transforms the entire creative process.

GPT-4o vs DALL-E 3 infographic example (text in AI images)

DALL-E 3 infographic example

The DALL-E version is overly complicated and full of AI text issues. The GPT-4o version is basic but follows my prompt exactly. Apart from the word “fairness”, where the “f” looks more like a “t”, the text in the image is perfect.

GPT-4o vs DALL-E 3 (realistic photo example)

DALL-E 3 realistic photo example

The DALL-E version is probably the worst of any model. Yes, the hands have five fingers, but it shows two people rather than the one “person” requested, and the overall style and shading make them look digitised rather than like a realistic photo. The 4o image, on the other hand, is perfect in every way: it’s photorealistic and everything is in proportion. It’s actually the best of the bunch, but keep reading.

GPT-4o vs. Google Imagen

Google’s Imagen has been advancing rapidly, particularly in its integration with Google’s ecosystem. Compared to GPT-4o:

- Photorealism: Imagen excels at photorealistic rendering, with generally good results in standard scenarios. GPT-4o matches this quality while offering more flexible interaction.

- Integration: Imagen benefits from integration with Google’s broader ecosystem but lacks the deeply conversational interface of GPT-4o. This creates a more structured, less natural interaction model.

- Anatomical accuracy: Imagen has made significant progress but still encounters difficulties with hands, complex poses, and unusual perspectives. GPT-4o demonstrates more consistent anatomical accuracy.

- Conversation and context: Imagen has limited ability to incorporate broader conversation context or engage in conversational refinement compared to GPT-4o’s deeply integrated approach.

For users already invested in Google’s ecosystem, Imagen offers advantages through integration. For those prioritising natural conversation and flexible editing, GPT-4o provides a more intuitive experience.

GPT-4o vs Google Imagen infographic example (text in AI images)

Google Imagen 3 infographic example

The Google Imagen version looks more attractive but is splattered with text issues.

GPT-4o vs Google Imagen (realistic photo example)

Google Imagen 3 realistic photo example

This one is a close call. It might be the lighting, but Google’s Imagen 3 version looks slightly less realistic. Otherwise, it’s very good, showing just one person in a photographic style, with both hands in proportion and five fingers on each.

GPT-4o vs. Stable Diffusion

As an open-source model, Stable Diffusion offers maximum flexibility but with different trade-offs:

- Customisability: Stable Diffusion allows for extensive customisation and fine-tuning, making it powerful for technical users with specific requirements. GPT-4o offers less customisation but greater accessibility.

- Resource requirements: Running Stable Diffusion locally requires hardware resources and technical knowledge. GPT-4o runs in the cloud, requiring no local resources but with potential usage limits.

- Text rendering: Base Stable Diffusion models struggle with text rendering, though community models have improved this capability. GPT-4o offers superior text handling without requiring specialised models.

- Interface: Stable Diffusion interfaces vary widely, from command-line tools to web-based GUIs. None offer the deeply conversational experience of GPT-4o.

- Creative freedom: As open-source software, Stable Diffusion has fewer built-in restrictions, placing more responsibility on users regarding content policies. GPT-4o balances creative freedom with appropriate safety guardrails.

For technical users who prioritise customisation and control, Stable Diffusion remains valuable. For those seeking an accessible, conversational image creation experience, GPT-4o offers significant advantages.

GPT-4o vs Stable Diffusion infographic example (text in AI images)

Stable Diffusion 3 infographic example

Much like the DALL-E 3 version, this is overly complex, with all text other than the title “The Future of AI” being totally illegible.

GPT-4o vs Stable Diffusion (realistic photo example)

Stable Diffusion 3 realistic photo example

The Stable Diffusion version isn’t terrible at first glance, but it still feels a bit digitised, and if you look closely at the proportions of the hands relative to the face, they look more like somebody else’s arms and hands. Look even closer and you’ll see problems with the fingers on both hands. The left hand is notably worse, showing six fingers, almost as if two hands have been merged into one mutant hand.

Stable Diffusion image – 6 fingers and a strange overlapping effect – two hands merged together or one?

GPT-4o vs. Midjourney

Midjourney has established itself as a leader in generating aesthetically pleasing, artistic images. Compared to GPT-4o, here’s what I’ve found:

- Artistic quality: Midjourney still excels at creating visually striking, artistic images with distinctive aesthetic qualities. For purely artistic applications, it remains a strong contender.

- Text rendering: Midjourney continues to struggle with text rendering, particularly for longer passages or precise formatting. GPT-4o’s superior text handling makes it more suitable for content requiring accurate text elements.

- Interface and accessibility: Midjourney’s Discord-based interface requires learning specific command syntax rather than interacting through natural conversation. GPT-4o’s conversational approach is more accessible to non-technical users.

- Editing workflow: Midjourney provides powerful variation and parameter-based editing capabilities, but through a more technical interface. GPT-4o’s natural language editing is more intuitive for most users.

- Availability: Midjourney requires a paid subscription for all usage, while GPT-4o is available to all ChatGPT users, including those on the free tier (with usage limits).

Sadly, I’ve not had a chance to test Midjourney yet, but I will come back to this.

For artistic experimentation and stylised imagery, Midjourney remains excellent. For practical, professional applications, particularly those requiring text elements or natural conversation, GPT-4o offers significant advantages.

GPT-4o Image Editing

I wasn’t totally happy with the simplicity of the GPT-4o infographic, so I gave it the following editing prompt.

Editing prompt: Make my above infographic more visually engaging, add stronger typography for the title so it jumps out, add different pastel colours to each section or section heading background. Within each of the 4 boxes, add 4 sub-sections/boxes to elaborate on each and provide more detail.

GPT-4o image editing infographic example

Again, this isn’t perfect, as it doesn’t show four boxes inside each of the original four boxes, but it’s very close. It has applied the colours as requested and added vastly more text than any other model I tested could generate, all spelt correctly.

Key Takeaways and Future Outlook

After three years of experimenting with AI and ChatGPT image generation, from early tools that felt more like interesting toys to the sophisticated systems available today, I’ve developed a perspective on where we are and where we’re heading.

Here are my key takeaways from exploring the capabilities of GPT-4o image generation:

1. Integration Changes Everything

The native integration of image generation into a multimodal AI system fundamentally transforms both the quality of the output and the nature of the creative process. Rather than treating image generation as a separate task, GPT-4o approaches it as another form of communication, as natural as writing text or speaking. This is exactly the experience we’ve all come to know and love with ChatGPT for text-related tasks.

This integration enables a conversational, iterative approach to image creation that feels collaborative rather than technical. It’s not just about better images, it’s about a better way of creating them.

2. Technical Barriers Are Falling

The most significant technical limitations that have plagued AI image generators, like text rendering, anatomical accuracy, reflections, and transparency, are being overcome. While not perfect, GPT-4o makes substantial progress in addressing these challenges, expanding the range of images that can be reliably generated.

These improvements transform AI image generation from an interesting curiosity into a practical tool for everyday creative tasks. As these technical barriers continue to fall, the utility and adoption of these tools will only increase.

3. Democratisation of Visual Creation

Perhaps the most profound impact of GPT-4o is its democratisation of visual content creation. By making sophisticated image generation accessible through natural conversation, it empowers users regardless of their technical expertise or design background.

This democratisation has far-reaching implications for industries that have traditionally relied on specialised skills and expensive software. Small businesses, educators, students, and content creators can now produce professional-quality visual content without specialised training or resources.

4. The Future Is Conversational

The most significant shift I’ve observed is the move toward conversational creation. Rather than writing lengthy prompts or learning specialist techniques, users can express their ideas naturally and refine them through dialogue. This conversational approach makes the technology accessible to a much broader audience and enables a more natural creative process.

As AI image generation continues to evolve, I expect this conversational aspect to become even more sophisticated, with models developing a better understanding of user intent, maintaining longer creative contexts, and offering more nuanced guidance throughout the creative process.

5. Speed Remains a Challenge

One area where GPT-4o (like all AI image generation models) still lags is speed. As OpenAI acknowledged during its announcement:

“Images are much slower than our previous image generation thing, but like unbelievably better. We think it’s super super worth the wait.”

In my experience, this trade-off between quality and speed is generally worthwhile. I'd rather wait a bit longer for an image that accurately captures my vision than quickly receive a flawed result that I end up abandoning or endlessly trying to fix. However, for workflows requiring rapid iteration or high volume, the current generation speed could be limiting.

OpenAI has indicated that speed improvements will come over time, suggesting ongoing optimisation efforts. As these improvements materialise, the practical utility of GPT-4o for time-sensitive applications will only increase.

Practical Test Cases: What I’ve Tried and What I’ve Learned

Throughout my exploration of ChatGPT image generation with GPT-4o, I've tested it across a wide range of practical scenarios. These tests have given me a clearer understanding of its strengths, limitations, and suitability for specific applications.

Educational Materials

As someone who occasionally creates educational content, I was particularly interested in GPT-4o’s ability to generate informative visuals. I tested it by requesting educational infographics with labelled diagrams and explanatory text, a task that requires both visual clarity and accurate text rendering.

The results were impressive. GPT-4o consistently produced clean, informative infographics with properly formatted text and clear visual elements. The ability to refine these images through conversation made the process remarkably efficient.

This capability is transformative for educators without graphic design backgrounds. Creating custom visual learning resources no longer requires specialised software or skills, just a clear idea and the ability to communicate it conversationally.

Marketing Assets

I also tested GPT-4o’s suitability for creating marketing materials, including product advertisements, social media graphics, and promotional imagery. These tests focused on brand consistency, visual appeal, and effective communication of key messages.

While not quite matching the work of professional graphic designers, GPT-4o produced marketing assets that were more than adequate for many professional or business purposes. The ability to include accurate text, maintain consistent branding elements, and create visually appealing layouts makes it a valuable tool for small businesses and content creators without dedicated design resources.

The conversational refinement process was particularly valuable for marketing applications, allowing for rapid iteration based on feedback.

Technical Illustrations

Technical illustrations present unique challenges, requiring accuracy, clarity, and precision. I tested GPT-4o by requesting diagrams of various mechanical systems, architectural views, and technical concepts.

The results were mixed but promising. For relatively common technical subjects, GPT-4o produced accurate, clear illustrations with proper labelling and proportions. For complex technical concepts, the results were less consistent, occasionally missing key details or misrepresenting technical elements.

This suggests that while GPT-4o represents a significant advancement for general-purpose technical illustration, specialist domains may still benefit from purpose-built tools or human expertise.

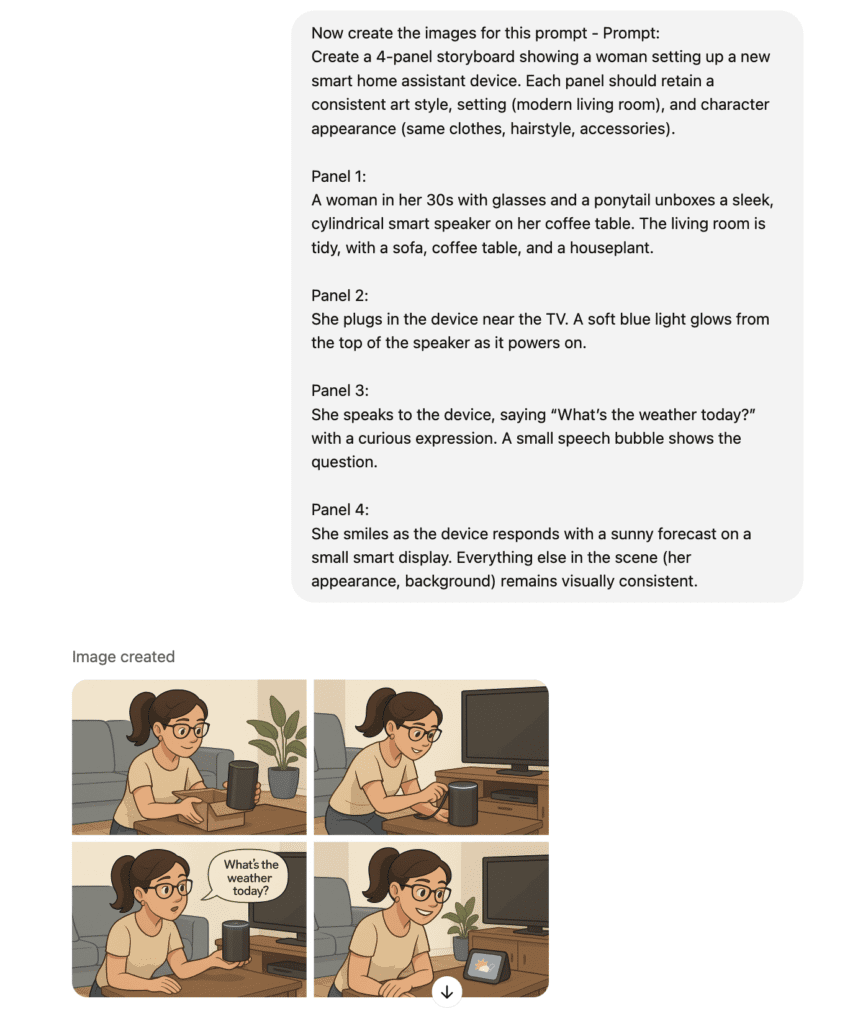

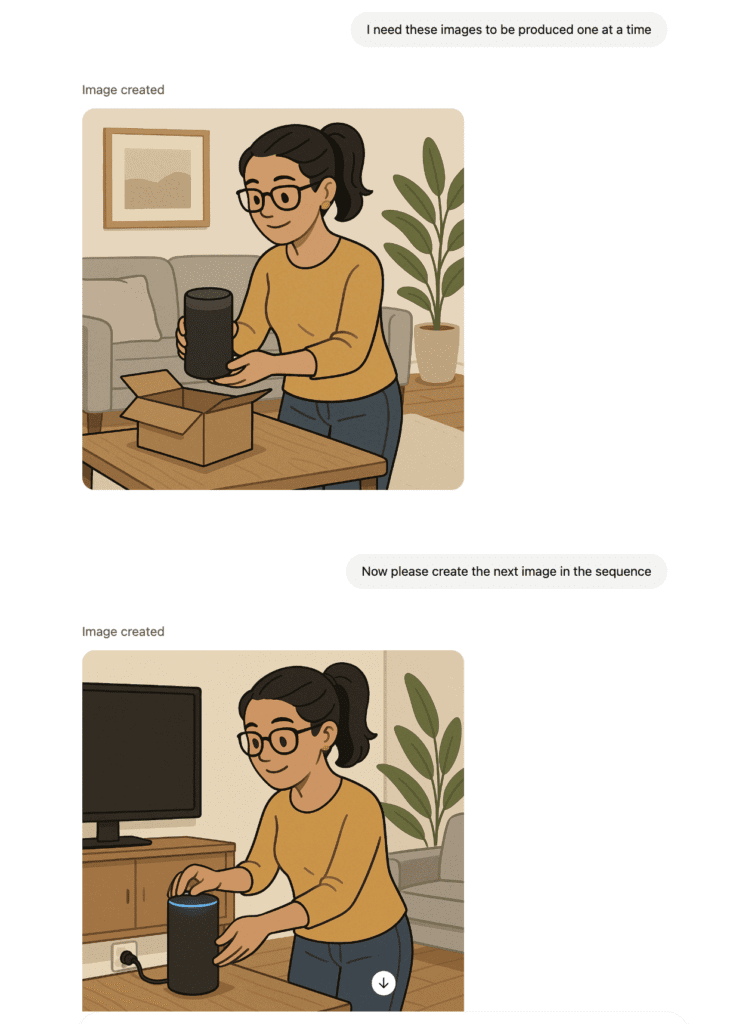

Storyboarding and Sequential Imagery

The ability to maintain consistency across multiple related images is crucial for storyboarding, sequential illustrations, and narrative content. I tested this by requesting a series of images depicting simple narratives or processes, evaluating how well GPT-4o maintained the general appearance, details, and stylistic consistency.

The first example below shows my attempt at a storyboard. In this instance, it produced four scenes within a single image.

GPT-4o – my first go at image storyboarding

In the next attempt, I wanted to see how well it could maintain the correct likeness across sequential prompts to produce one image at a time.

GPT-4o – sequential image storyboarding example with consecutive prompts

GPT-4o performed surprisingly well in this area. It maintained consistent character features, environmental details, and stylistic elements across multiple images, enabling the creation of coherent visual narratives. The conversational interface made it easy to reference earlier images and ensure continuity throughout the sequence.

This capability opens up new possibilities for visual storytelling, instructional sequences, and process documentation.

Final Thoughts on ChatGPT Image Generation

When I first began experimenting with AI image generation a few years ago, it felt like an interesting novelty, a glimpse of future possibilities rather than a practical tool for everyday use.

The images produced were often impressive at first glance but riddled with flaws that limited their utility for serious work. Text was garbled, hands had too many fingers, reflections defied the laws of physics, and creating exactly what you wanted required special knowledge and endless iterations.

GPT-4o represents a fundamental change. By integrating image generation and editing directly into a powerful language model, it transforms AI image creation from a technical challenge into a natural conversation. The improvements in text rendering, anatomical accuracy, and physical realism expand the range of images that can be reliably generated. The conversational, iterative approach makes the technology accessible to users regardless of technical expertise.

For me, this transformation has changed how I think about visual content creation. What once required special skills, expensive software, or external services can now be accomplished through natural conversation with an AI assistant. This democratisation of visual creation has profound implications for how we communicate, educate, market, and express ourselves visually.

Is GPT-4o perfect? Certainly not. Generation speed remains a limitation, and there are still subjects and scenarios where human artists and designers provide superior results. There are also restrictions related to prompt complexity, and longer images can end up clipped. However, as the technology continues to evolve, I expect each update to bring new capabilities and improvements.

My main conclusion is that AI image generation has now transitioned from novelty to utility. It has gone from an interesting experiment to a practical tool for everyday creative tasks, and we can now rely on ChatGPT image generation for a range of professional applications.

For anyone who has been watching the evolution of AI with a mix of curiosity and scepticism, as I have for the past three years, GPT-4o represents a turning point worth exploring. The gap between what we can imagine and what we can create has never been smaller, and the barrier to entry has never been lower.

Whether you’re a designer looking to streamline your workflow, a business owner seeking to create professional-quality visual content, or simply someone curious about the creative possibilities of AI, GPT-4o’s image generation capabilities offer a glimpse of a future where visual creation is as accessible as conversation.

My Future Outlook:

- We can expect continued improvements in generation speed as OpenAI optimises the model

- Future iterations will likely offer even more sophisticated editing capabilities and creative control

- Integration with other creative tools and workflows will expand the practical applications of AI image generation

- As technical barriers continue to fall, adoption will increase across various industries and applications

- The boundary between human and artificial creativity will become increasingly blurred, opening new possibilities for collaboration

- The democratisation of visual creation will have far-reaching implications for industries that have traditionally relied on specialised skills and expensive software

For further reading on the future of AI, the future of SEO, or the role of AIO or LLM SEO, check out our guides below.

AI Means The Death of SEO? Not So Fast – Let’s Look At How AI is Shaping The Future of SEO

From SEO to GEO: The Ultimate AI SEO Guide (AI Mode, AI Overviews, RAG, AIO, AEO & LLM SEO)

If you like what you’ve read or have any experiences of your own to share, please drop us a comment below or join us on social media.
