Introduction

- 1 Introduction

- 2 What is a Web Page Scraper or Website Scraper?

- 3 What is Meant by SEO Scraping?

- 4 Why Use SEO Spider as Your Web Page Scraping Tool Over the Alternatives?

- 5 Introduction to Screaming Frog Web Scraping and Why This is So Powerful

- 6 Using SEO Spider to Scrape All URLs, Meta Data, Headings & Other On-Page Data

- 7 ChatGPT Web Scraping – Is This Possible?

- 8 Can ChatGPT Review Code?

- 9 ChatGPT SEO Analysis – is This Possible?

- 10 Tidying Up Your Exports with ChatGPT to Scrape Web Data into Excel

- 11 Converting Excel or CSV to MS Word for Content Editing Using ChatGPT

- 12 What Other Web Scraper Tools and Web Scraping Techniques Exist?

- 13 Are There Any Entirely Free Website Scraping Software?

- 14 Conclusion

Web scraping and data analysis are two crucial techniques for undertaking website optimisation and enhancing search engine rankings. This guide will provide a comprehensive overview of using Screaming Frog’s SEO Spider tool to scrape website data and ChatGPT AI to analyse and assist with extracting specific website data for SEO.

For more information on ChatGPT web scraping or using ChatGPT for data analysis, just skip ahead a few sections. The initial focus of this guide will be on web scraping and using SEO Spider, but we’ll come on to how ChatGPT can be used to complement the process later in the guide.

What is a Web Page Scraper or Website Scraper?

Web scraping is the process of extracting data from websites. It can be done in various ways, including manual copy-pasting, simple HTTP requests, HTML parsing, and more.

Most often, this is done using a web page scraper tool or piece of software designed to extract data from websites. These tools automate the process of gathering information from web pages, saving time and resources compared to manual data collection. Web scrapers can extract text, images, links, and more, depending on the user’s requirements.
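To make the HTML parsing approach a little more concrete, here is a minimal sketch using only Python's standard library. It collects the links on a page and splits them into internal and external ones. The sample markup, the `example.com` URLs and the helper names are assumptions made purely for illustration; in practice the HTML would come from an HTTP request or a tool such as SEO Spider.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collects the href of every <a> tag, resolved against a base URL."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(urljoin(self.base_url, href))


def split_links(html, base_url):
    """Return (internal, external) link lists for one page of HTML."""
    parser = LinkCollector(base_url)
    parser.feed(html)
    site = urlparse(base_url).netloc
    internal = [u for u in parser.links if urlparse(u).netloc == site]
    external = [u for u in parser.links if urlparse(u).netloc != site]
    return internal, external


# Hypothetical page source used only for this example.
SAMPLE_HTML = """
<html><body>
  <a href="/about">About</a>
  <a href="https://example.org/partner">Partner</a>
  <a>No href here</a>
</body></html>
"""

internal, external = split_links(SAMPLE_HTML, "https://example.com/")
print("Internal:", internal)
print("External:", external)
```

A dedicated scraper does far more than this (crawling, rendering, rate limiting), but the core idea of parsing HTML and pulling out specific elements is the same.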

What is Meant by SEO Scraping?

SEO scraping is a subset of website scraping that focuses specifically on extracting data that aids search engine optimisation efforts.

This could mean scraping information and data such as:

- keywords

- meta tags

- important HTML tags

- internal links

- external links

- broken links

- word counts

- errors

- redirects

- canonical tags

- content (text and images)

- page loading times

- creation and last-modified dates

- and much more

The aim is to analyse the SEO characteristics of a website to identify what can be done to improve its ranking on search engines like Google.
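As a rough sketch of what extracting a few of these SEO elements looks like in code, the example below pulls the title, meta description, canonical URL and H1 headings out of a page using Python's built-in `html.parser`. The sample page and the `extract_seo_fields` helper are hypothetical, and this is not how SEO Spider works internally; it is only meant to show the kind of data involved.

```python
from html.parser import HTMLParser


class SeoFieldParser(HTMLParser):
    """Collects a few common on-page SEO fields from one HTML document."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.meta_description = ""
        self.canonical = ""
        self.h1s = []
        self._capturing = None  # name of the tag whose text we are inside

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._capturing = "title"
        elif tag == "h1":
            self._capturing = "h1"
            self.h1s.append("")
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.meta_description = attrs.get("content") or ""
        elif tag == "link" and (attrs.get("rel") or "").lower() == "canonical":
            self.canonical = attrs.get("href") or ""

    def handle_endtag(self, tag):
        if tag == self._capturing:
            self._capturing = None

    def handle_data(self, data):
        if self._capturing == "title":
            self.title += data
        elif self._capturing == "h1":
            self.h1s[-1] += data


def extract_seo_fields(html):
    """Return a dict of SEO fields found in the given HTML string."""
    parser = SeoFieldParser()
    parser.feed(html)
    return {
        "title": parser.title.strip(),
        "meta_description": parser.meta_description.strip(),
        "canonical": parser.canonical.strip(),
        "h1": [h.strip() for h in parser.h1s],
    }


# Hypothetical page source used only for this example.
SAMPLE_PAGE = """
<html><head>
<title>Blue Widgets | Example Shop</title>
<meta name="description" content="Buy blue widgets online.">
<link rel="canonical" href="https://example.com/widgets/blue">
</head><body><h1>Blue Widgets</h1><p>Hand-made blue widgets.</p></body></html>
"""

fields = extract_seo_fields(SAMPLE_PAGE)
print(fields)
```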

Why Use SEO Spider as Your Web Page Scraping Tool Over the Alternatives?

SEO Spider by Screaming Frog is a robust tool that has many features specific to SEO analysis. It simplifies the process of website and SEO scraping by automating the process and providing user-friendly interfaces, allowing for in-depth analysis of HTML source code.

Unlike many online tools, SEO Spider can crawl websites very quickly, adhere to custom rules for scraping, and generate custom reports that can be directly used for SEO audits. The tool can even carry out duplicate content detection and provide a wide range of useful website and SEO reports.

While there are other scrapers available, SEO Spider’s ability to combine general web scraping capabilities with specialised SEO analysis makes it a go-to choice for SEO professionals and website owners. One of its biggest benefits is the ability to extract and integrate data from third-party tools, such as:

- Google Analytics

- Google Search Console

- PageSpeed Insights

- Moz

- Ahrefs

- Majestic

One possible downside, or benefit depending on your perspective, is that it’s not cloud-based: it requires installation and can be resource-intensive in terms of CPU and memory usage. Compared to alternatives like SEMRush, SEO Spider may offer fewer features overall, but no other tool comes close to its detailed crawling capabilities and the level of customisation possible.

There’s very little SEO Spider can’t extract or scrape from a website.

Introduction to Screaming Frog Web Scraping and Why This is So Powerful

Screaming Frog’s SEO Spider is not just a crawler; it’s a powerful web scraper when configured correctly using features like custom search, custom extraction and content areas.

It can execute JavaScript, respect canonical tags and status codes, and even integrate with the tools mentioned above to provide an unparalleled level of SEO data and insights for your website. The flexibility and adaptability of SEO Spider make it a powerhouse for anyone involved in SEO or web data analysis.

In ‘Spider’ mode, SEO Spider crawls a website and extracts data like URLs, meta titles, descriptions, and word counts. You can also configure it to exclude certain parameters, adjust crawl speed, and define content areas for more precise scraping.

SEO Spider also supports List and SERP modes. ‘List’ mode allows you to check a predefined list of URLs, while ‘SERP’ mode enables you to upload page titles and meta descriptions directly into the SEO Spider to calculate pixel widths and character lengths.

Using SEO Spider to Scrape All URLs, Meta Data, Headings & Other On-Page Data

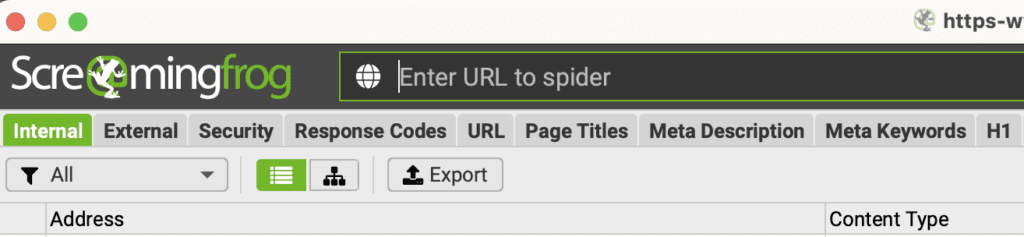

‘Spider’ mode is the simplest way to scrape data. Using the default configuration, SEO Spider will extract a great deal of useful SEO data.

To do this, start by launching SEO Spider and entering the URL you wish to scrape. SEO Spider will crawl the website and provide data on URLs, status codes, meta titles, descriptions, heading tags, word counts, and much more.

This very basic crawl allows you to scrape an incredible amount of information:

- Address

- Content Type

- Status Code

- Status

- Indexability

- Indexability Status

- Title 1

- Title 1 Length

- Title 1 Pixel Width

- Meta Description 1

- Meta Description 1 Length

- Meta Description 1 Pixel Width

- Meta Keywords 1

- Meta Keywords 1 Length

- H1-1

- H1-1 Length

- H1-2

- H1-2 Length

- H2-1

- H2-1 Length

- H2-2

- H2-2 Length

- Meta Robots 1

- X-Robots-Tag 1

- Meta Refresh 1

- Canonical Link Element 1

- rel="next" 1

- rel="prev" 1

- HTTP rel="next" 1

- HTTP rel="prev" 1

- amphtml Link Element

- Size (bytes)

- Transferred (bytes)

- Word Count

- Sentence Count

- Average Words Per Sentence

- Flesch Reading Ease Score

- Readability

- Text Ratio

- Crawl Depth

- Folder Depth

- Link Score

- Inlinks

- Unique Inlinks

- Unique JS Inlinks

- % of Total

- Outlinks

- Unique Outlinks

- Unique JS Outlinks

- External Outlinks

- Unique External Outlinks

- Unique External JS Outlinks

- Closest Similarity Match

- Near Duplicates

- Spelling Errors

- Grammar Errors

- Hash

- Response Time

- Last Modified

- Redirect URL

- Redirect Type

- Cookies

- HTTP Version

- URL Encoded Address

- Crawl Timestamp

Everything here can be easily exported to CSV, Excel or Google Sheets. All you need to do is leave the “All” dropdown selected and click the Export button to download the data.
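Once the data is exported, it can also be checked with a short script. The sketch below reads a CSV export and flags indexable pages with a missing or overly long title. The column names come from the list above, but the sample rows, the 60-character threshold and the `find_title_issues` helper are assumptions for illustration only.

```python
import csv
import io

# Hypothetical excerpt of an "All" export; a real export has many more columns.
SAMPLE_EXPORT = """Address,Status Code,Indexability,Title 1,Title 1 Length
https://example.com/,200,Indexable,Home,4
https://example.com/old,301,Non-Indexable,,0
https://example.com/blank,200,Indexable,,0
https://example.com/widgets,200,Indexable,A very long page title that keeps going well past sixty characters in total,75
"""

MAX_TITLE_LENGTH = 60  # assumed threshold; adjust to your own guidelines


def find_title_issues(csv_text, max_length=MAX_TITLE_LENGTH):
    """Return (address, issue) pairs for pages that returned a 200 status."""
    issues = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        if row["Status Code"] != "200":
            continue  # skip redirects and errors
        title = row["Title 1"].strip()
        if not title:
            issues.append((row["Address"], "missing title"))
        elif len(title) > max_length:
            issues.append((row["Address"], "title too long"))
    return issues


issues = find_title_issues(SAMPLE_EXPORT)
for address, issue in issues:
    print(f"{address}: {issue}")
```

For a real export, you would open the downloaded CSV file instead of using the inline sample string.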

We’re not going to talk about ChatGPT just yet, but imagine how much fun you could have using a powerful AI to analyse and interpret this data. The free version of ChatGPT (GPT-3.5) is powerful, but the paid version with GPT-4, Advanced Data Analysis (Code Interpreter) and ChatGPT plugins can do so much more.

In fact, there’s so much ChatGPT could do that we’ll only be scraping (no pun intended) the surface here.

Using SEO Spider to Scrape Web Page Content

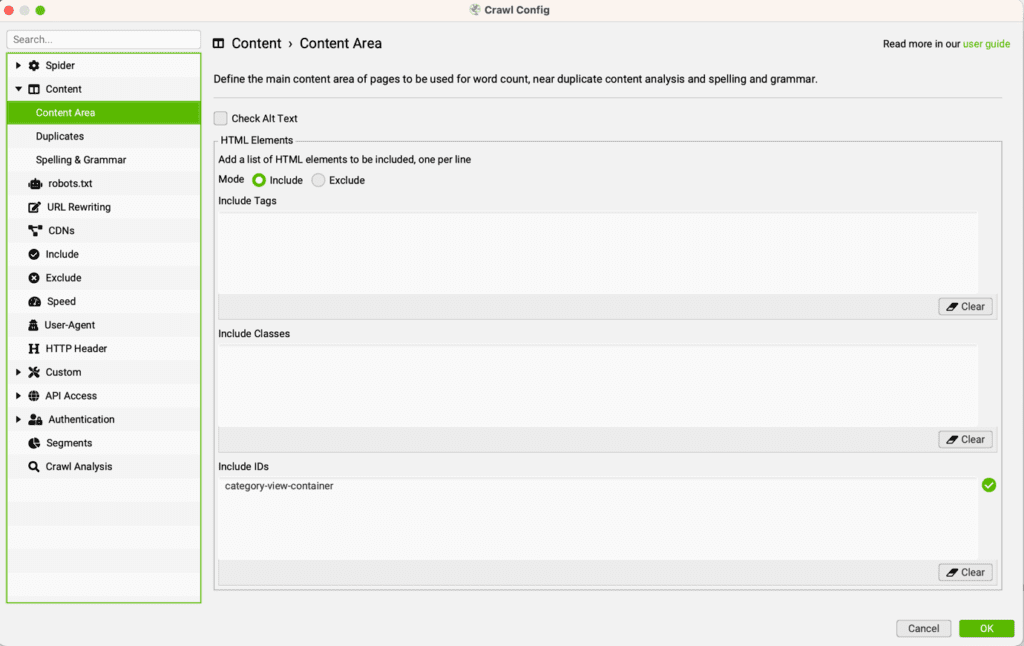

For larger websites, and especially when you configure SEO Spider using custom rules, it’s really important to define the crawl parameters.

This matters for two reasons. The more data you process, the longer SEO Spider will take and the more of your computer’s resources it will consume. In addition, an oversized export is very difficult to work with.

So, first you’ll need to do the following:

- Add excludes, e.g. \? to avoid crawling query strings – the full list can be found here

- Adjust speed and max threads to process the website quickly but without causing response code errors – this will need some testing but usually 15-30 is a good range

- Define the content area to determine actual word counts and define where the main content is stored. Otherwise, SEO Spider will assess the entire page, including the menu, header and footer areas.

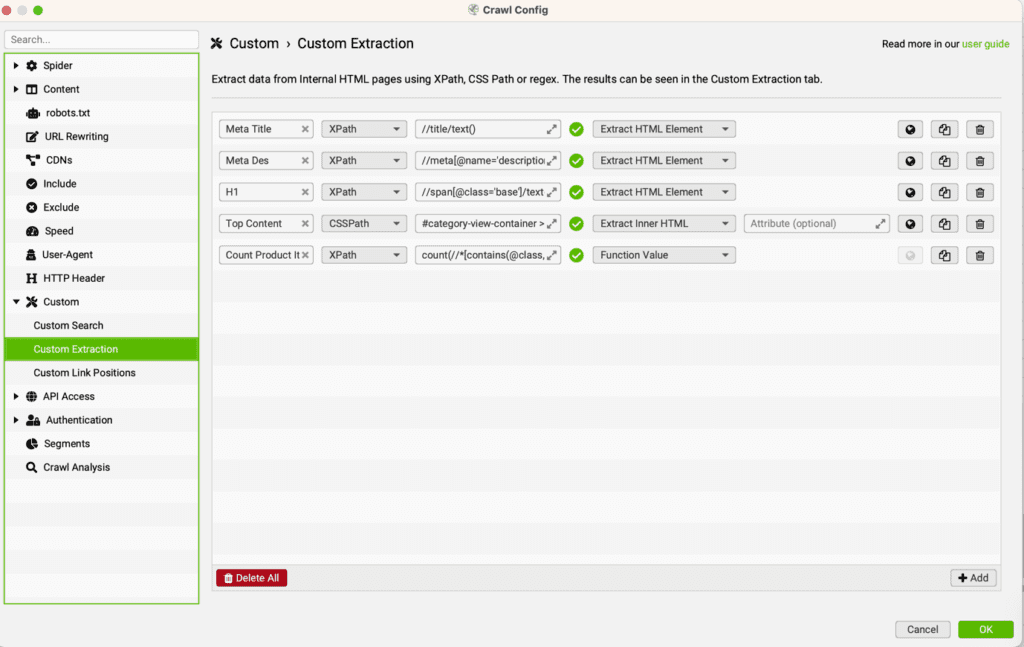

- Create custom extraction rules to define which elements of content you would like to scrape

- When defining the custom extraction rules for scraping content, you can use XPath, CSS selectors or Regex – the example below shows rules to extract the meta title, meta description, H1, main content section, and product counts. Technically, the meta title, meta description and H1 rules aren’t actually needed as these are scraped by default.
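To make the first step in the list above more concrete, here is a minimal sketch of how an exclude rule such as `\?` filters out URLs with query strings. It assumes the pattern only needs to match part of the URL; check the SEO Spider user guide for the exact matching behaviour in your version. The `is_excluded` helper and the sample URLs are hypothetical.

```python
import re

# Exclude pattern from the guide: any URL containing a literal "?".
EXCLUDE_PATTERNS = [r"\?"]


def is_excluded(url, patterns=EXCLUDE_PATTERNS):
    """Return True if any exclude pattern matches somewhere in the URL."""
    return any(re.search(pattern, url) for pattern in patterns)


# Hypothetical URLs used only for this example.
urls = [
    "https://example.com/shoes",
    "https://example.com/shoes?colour=red",
    "https://example.com/search?q=boots&page=2",
]

crawlable = [u for u in urls if not is_excluded(u)]
print(crawlable)
```

Testing a pattern against a handful of known URLs like this is a quick way to confirm it excludes what you expect before running a full crawl.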

This is possibly one of the most powerful aspects of SEO Spider but unless you’re a coder, chances are that you will struggle with Xpath, CSS selectors and Regex. If this is you, please see the next section.

Finally, sanitise the data and re-adjust using the Xpath, CSS selectors or Regex rules as needed. Go back to the main screen leaving the “All” dropdown selected and click the Export button to download the data as an Excel or CSV file.

Identifying the correct Xpath, CSS selectors or Regex is a bit technical, but thankfully, even if you don’t know how to create these rules, ChatGPT can help. Again, more on this later!

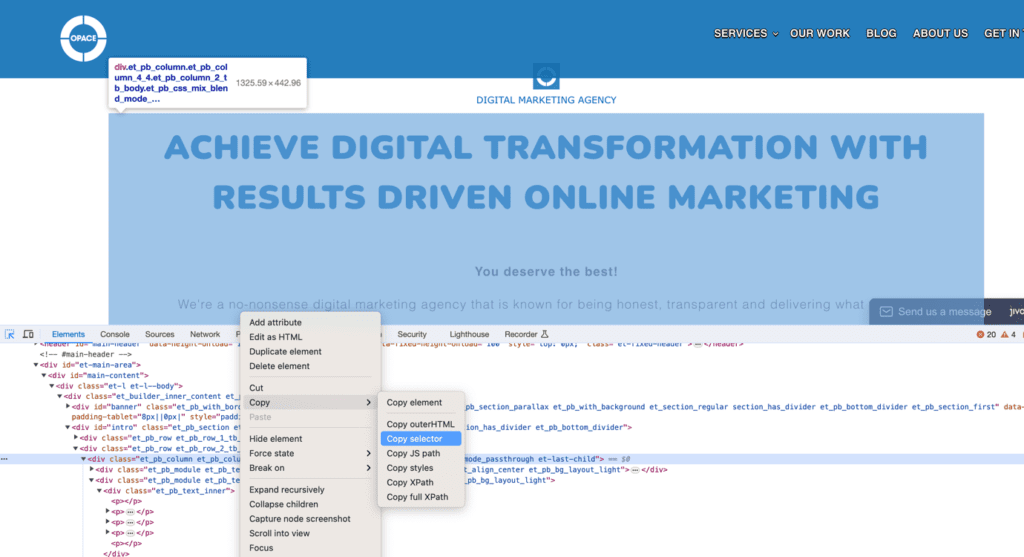

Option 1: Using CSS Selector Method

Whether it’s scraping content or counting elements on a page, finding the right rule to identify these can be a challenge.

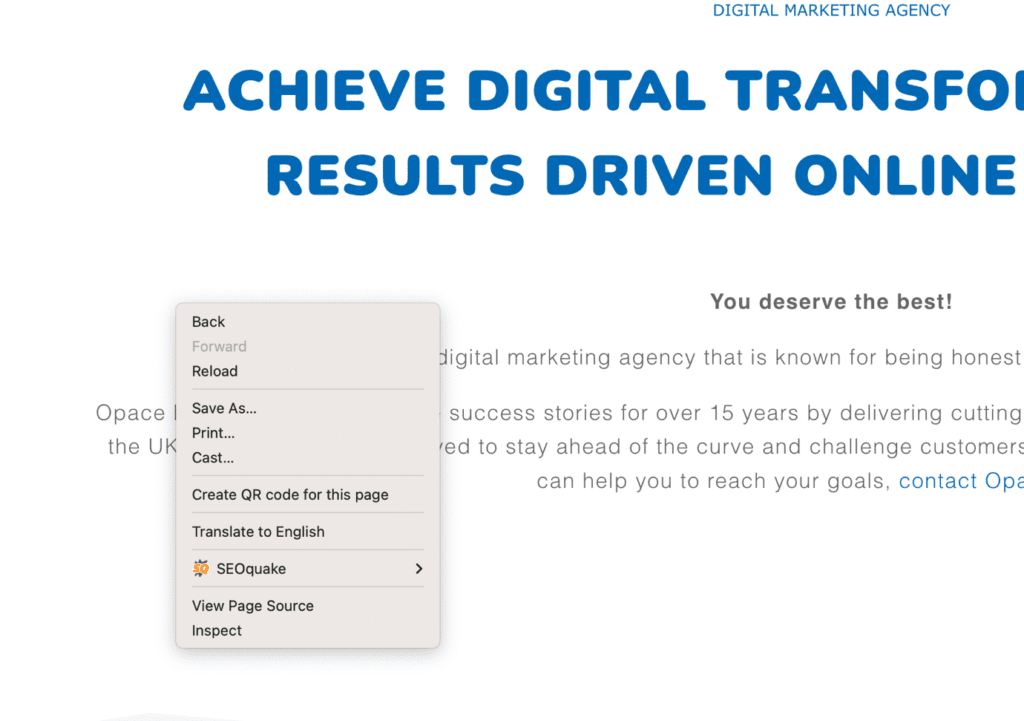

The simplest method is to use the CSS selector, but with all of the methods mentioned here you will need to use your browser’s inspect tool.

For Google Chrome, right-click near the selection you want to scrape/analyse and select Inspect.

Then click on the pointer arrow at the very top left of the inspect window and click on the area you want to scrape/analyse. The section will become highlighted to show you that you’ve selected the correct area.

Then go back to the inspect tool and you’ll see the line of code highlighted. Right-click it and select Copy > Copy selector.

Once that’s done, go to SEO Spider, Custom Extraction, select CSSPath, copy in the CSS selector from your browser and finally select Extract Inner HTML as shown in the earlier image.

This may take a few attempts to ensure you select the right part of code in your browser but you’ll know it’s correct when you see the SEO Spider results.

However, sometimes the CSS selector method isn’t powerful enough or you may struggle to understand if you’ve selected the right thing. This is where ChatGPT can become a powerful ally and your very own expert coder and data analyst all in one.

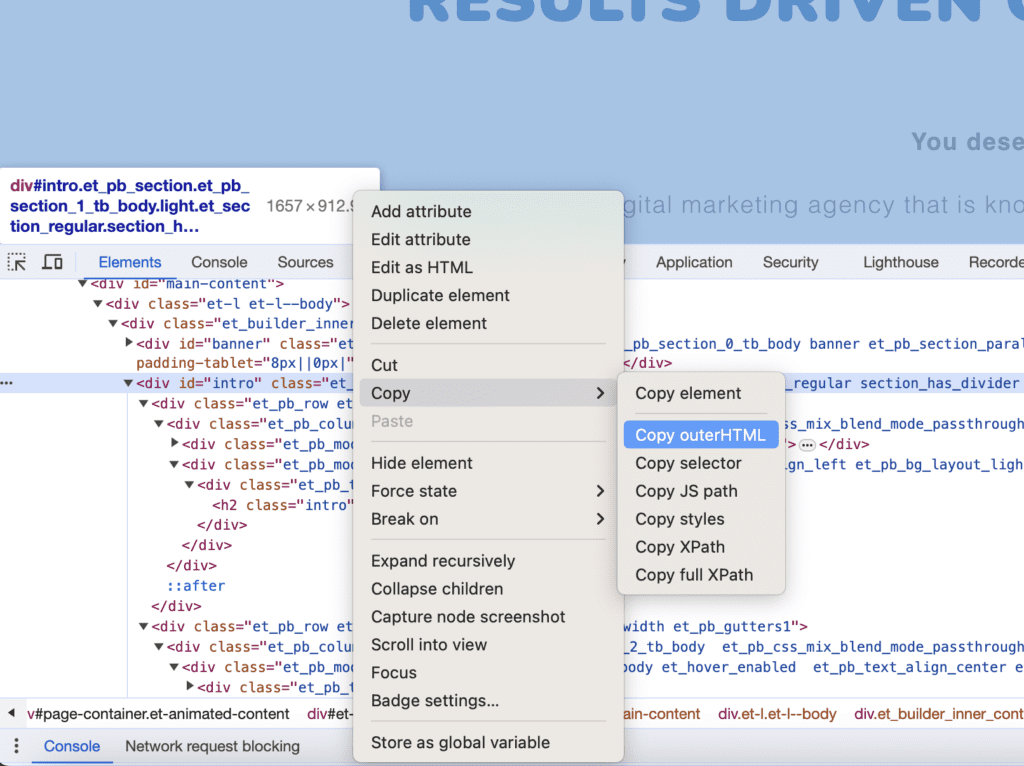

Option 2: Using XPath or Regex

For more complex extractions, XPath or Regex can be used.

These methods offer more granularity and control over what data is extracted. This is particularly useful for eCommerce websites that require more intricate scraping for product details, reviews, and even pricing information.

Using XPath or Regex involves the same process as above for CSS selectors; however, this time, instead of selecting Copy selector, click the Copy outerHTML option.

This copies all of the code associated with your selection.

You don’t need to know what it all means, but you can copy it into a ChatGPT window and ask questions.
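As a rough illustration of what a Regex extraction rule does with copied HTML, the sketch below pulls prices out of a product listing. The markup, the `price` class name and the pattern itself are assumptions for this example; a real site will need its own pattern.

```python
import re

# Hypothetical outerHTML copied from the browser's inspect tool.
SAMPLE_HTML = (
    '<li class="product"><span class="price">£24.99</span></li>'
    '<li class="product"><span class="price"> £1,299.00 </span></li>'
)

# Capture the numeric part of each price inside a span with class "price".
PRICE_PATTERN = re.compile(r'<span class="price">\s*£?([\d,]+\.\d{2})\s*</span>')

prices = PRICE_PATTERN.findall(SAMPLE_HTML)
print(prices)
```

Regex works on the raw text of the page, so it tends to break when attribute order or spacing changes. XPath or CSS selectors are usually more robust when the data sits in a predictable element.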

ChatGPT Web Scraping – Is This Possible?

Before we delve into using ChatGPT to review code, analyse the data, and provide recommendations, some readers may wonder why use SEO Spider at all when we have an intelligent AI like ChatGPT.

GPT-3.5 (free) and GPT-4 Models (paid)

Unfortunately, ChatGPT in its native form can’t perform web scraping. In fact, neither the free version (GPT-3.5) nor the paid version (GPT-4) can connect to the internet on its own, and the training data only goes up to 2021.

GPT-4 with Plugins (paid)

While ChatGPT-4 itself doesn’t perform web scraping, it can interact with plugins that have the ability to browse websites and their content.

Some examples of these include:

Sadly, none of these actually scrape the HTML web page code. Even “Scraper”, despite the name, won’t scrape code or meta data.

They’ll return the page content and allow you to ask questions, which can be very powerful, but as of today’s date, ChatGPT cannot be used as a website or SEO scraper.

Advanced Data Analysis aka Code Interpreter (paid)

You may think that the Advanced Data Analysis module, previously known as Code Interpreter, can do this.

With its extensive collection of Python libraries, Advanced Data Analysis with ChatGPT is very powerful (we’ll come back to this later). One such library is Beautiful Soup, which is often used for web scraping.

However, if you try this, you’ll quickly be informed that it can’t browse the internet.

It’s actually quite amusing as ChatGPT can browse the internet using Bing, albeit temporarily disabled at the moment, and it can also browse the internet using plugins; however, it can’t do this when using the Advanced Data Analysis module.

It’s almost like these elements have been intentionally siloed…

Can ChatGPT Review Code?

ChatGPT can write code, optimise code and create entire apps with the right instructions (prompts), so the answer to the question “Can ChatGPT review code?” is an absolute yes, and it does this very well.

For the purpose of this guide, we’ll focus on reviewing code related to web scraping. ChatGPT can assist you with all kinds of tasks involving CSS selectors, XPath and Regex, for example:

- CSS Selector, XPath, Regex: It can provide code, instructions and examples for these extraction methods.

- Code Conversion: You can ask ChatGPT to convert CSS to XPath/Regex and vice versa.

- Coding Explanations: It can explain how a particular piece of code works to extract data.

The only thing ChatGPT can’t do (at least without the Advanced Data Analysis module) is execute or test the code itself.

Here are some examples.

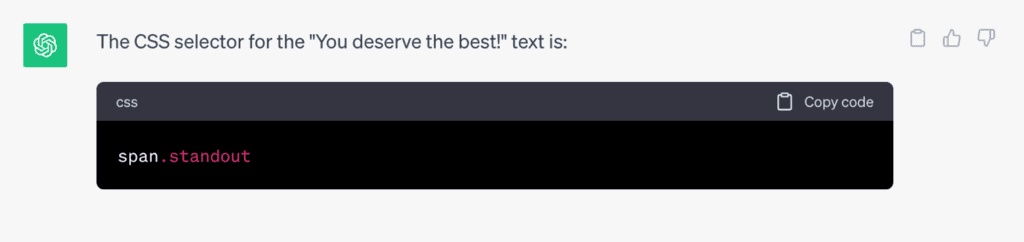

You can ask questions in natural language to get ChatGPT to give you the CSS selector needed.

PROMPT – I’m using SEO Spider to create custom extract rules. Using this code [data], please give me the CSS selector to identify “You deserve the best!”.

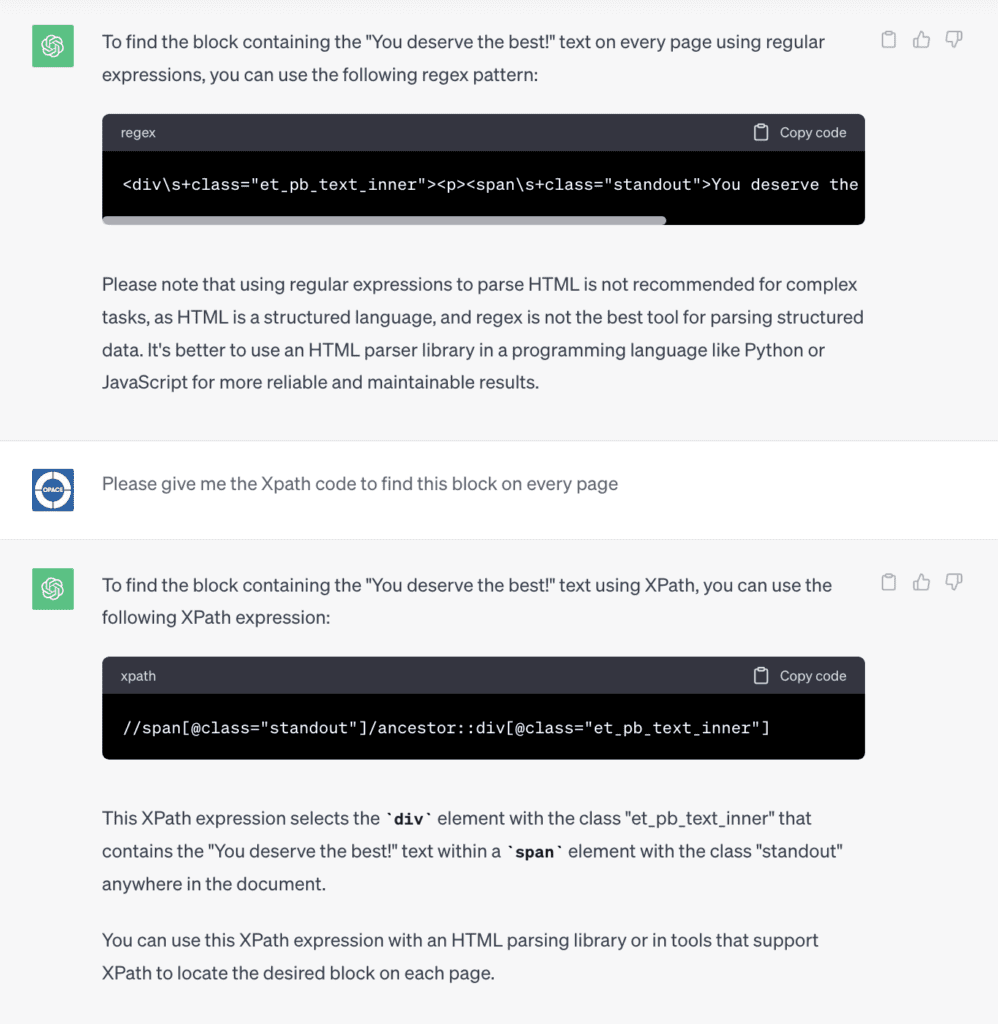

You can ask for the Regex or Xpath code to find this same block on every page of your website.

FOLLOW UP PROMPT 1 –

Please give me the Regex pattern to find this text on every page.

FOLLOW UP PROMPT 2 –

Please give me the Xpath code to find this text on every page.

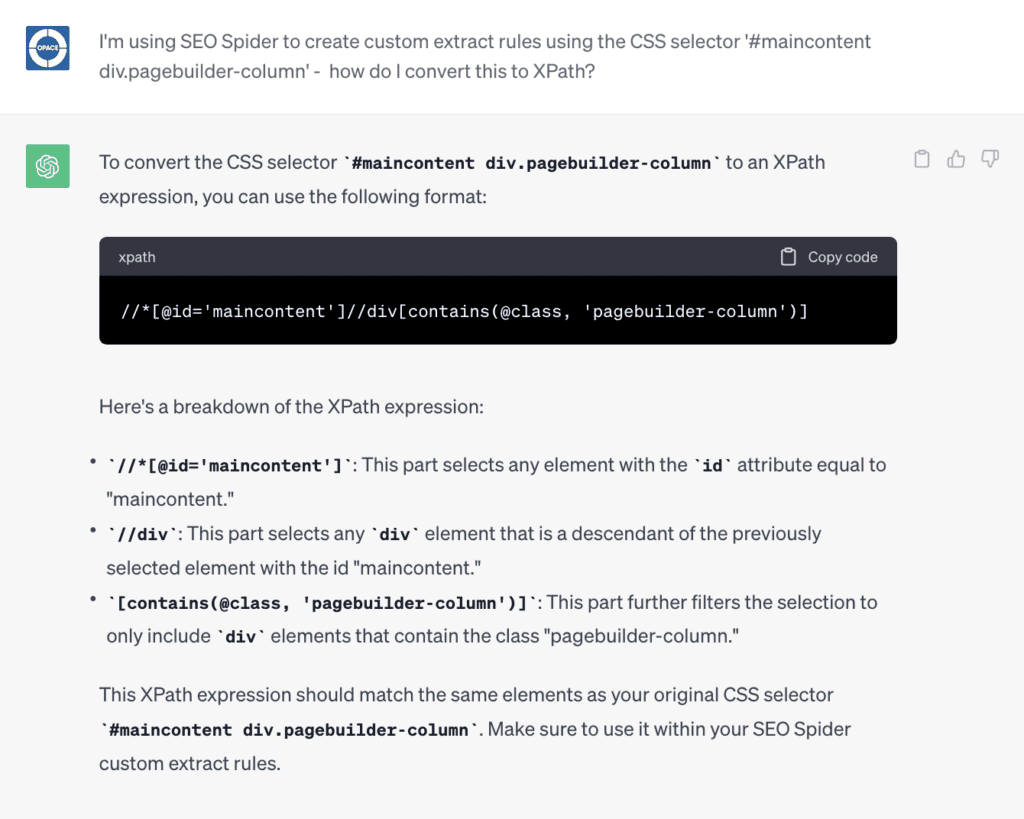

You can ask ChatGPT to convert CSS selectors to Xpath or Regex and give an explanation.

PROMPT –

I’m using SEO Spider to create custom extract rules using this CSS selector [data] – please convert this to Xpath
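To show roughly what such a conversion involves, here is a small sketch that turns very simple CSS selectors into XPath. It only handles tag names, `#id`, `.class`, and the descendant (space) and child (`>`) combinators. Selectors copied from Chrome often contain parts such as `:nth-child()`, which this sketch does not support. The function names and the sample selector are hypothetical.

```python
import re

# Matches one simple selector such as "div", "div#main" or "h2.title.large".
TOKEN = re.compile(
    r"^(?P<tag>[a-zA-Z][\w-]*)?(?P<id>#[\w-]+)?(?P<classes>(?:\.[\w-]+)*)$"
)


def simple_selector_to_xpath(part):
    """Convert one simple selector (no combinators) into an XPath step."""
    match = TOKEN.match(part)
    if not match:
        raise ValueError(f"Unsupported selector part: {part!r}")
    step = match.group("tag") or "*"
    if match.group("id"):
        step += f"[@id='{match.group('id')[1:]}']"
    for cls in filter(None, match.group("classes").split(".")):
        # Pad with spaces so "title" does not also match "subtitle".
        step += f"[contains(concat(' ', normalize-space(@class), ' '), ' {cls} ')]"
    return step


def css_to_xpath(selector):
    """Convert a simple CSS selector into an equivalent XPath expression."""
    xpath = ""
    axis = "//"  # descendant by default
    for token in selector.split():
        if token == ">":
            axis = "/"  # the next step must be a direct child
            continue
        xpath += axis + simple_selector_to_xpath(token)
        axis = "//"
    return xpath


converted = css_to_xpath("div#main > h2.title")
print(converted)
```

Seeing the mapping written out can help when checking whether a converted rule from ChatGPT looks sensible before pasting it into SEO Spider.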

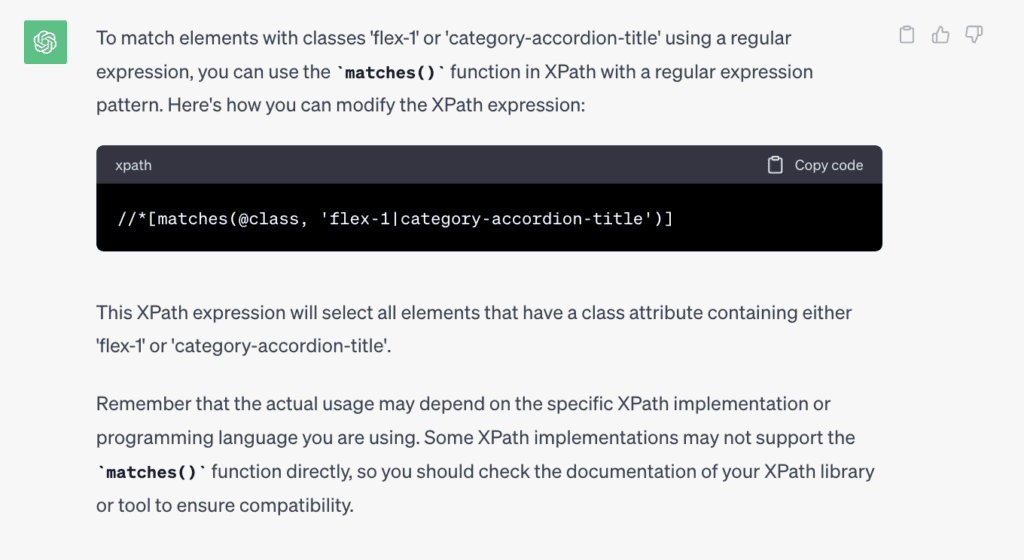

You can even ask for it to use conditional statements like “match” if one element AND/OR another is present.

Maybe you’re not looking to scrape text/HTML but you’re looking for other data.

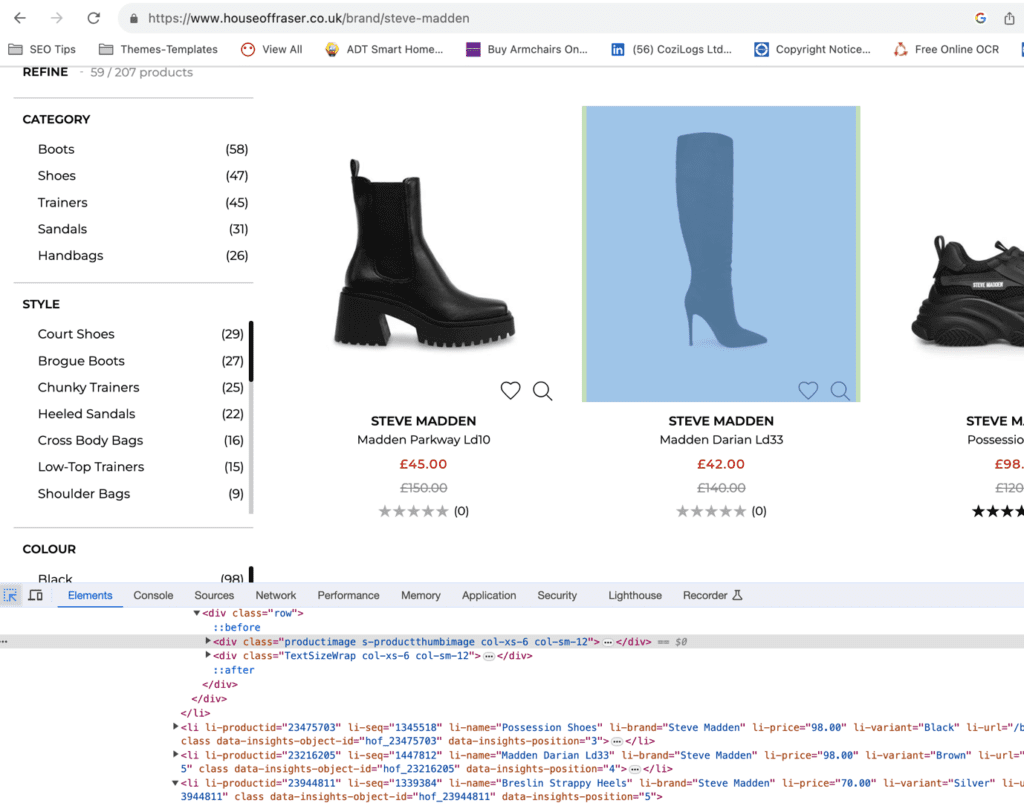

For example, if you are looking to count how many elements are on a page (e.g. product count), you can follow the same steps above to identify each product.

Using the House of Fraser example below, I’ve selected a product image and can clearly see the line of code in the inspect window.

You can copy the class “productimage” or any other class or ID that products have in common.

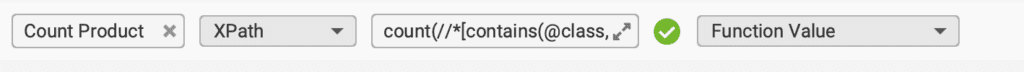

You can copy the Xpath rule below, or ask ChatGPT, and then copy the code into SEO Spider’s Custom Extraction screen but this time selecting Function Value from the dropdown instead of Extract Inner HTML.

count(//*[contains(@class, 'productimage')])
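If you want to sanity-check a count like this outside SEO Spider, the sketch below reproduces the same logic in Python. The standard library's `xml.etree.ElementTree` only supports a subset of XPath (no `count()` or `contains()`), so the check is emulated in plain Python here, and the parser needs well-formed markup. The sample snippet and the `count_by_class_fragment` helper are assumptions for illustration.

```python
import xml.etree.ElementTree as ET

# Hypothetical, well-formed product listing used only for this example.
SAMPLE_XHTML = """
<div id="listing">
  <div class="product"><img class="productimage lazy" src="a.jpg" /></div>
  <div class="product"><img class="productimage" src="b.jpg" /></div>
  <div class="banner"><img class="hero" src="c.jpg" /></div>
</div>
"""


def count_by_class_fragment(xml_text, fragment):
    """Count elements whose class attribute contains the given text.

    Mirrors count(//*[contains(@class, 'fragment')]) in XPath.
    """
    root = ET.fromstring(xml_text)
    return sum(1 for element in root.iter() if fragment in element.get("class", ""))


product_count = count_by_class_fragment(SAMPLE_XHTML, "productimage")
print(product_count)
```

Note that `contains()` is a substring match, so a class such as `productimage-thumb` would also be counted. Pick a class name that is specific to the elements you care about.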

Using Xpath or Regex in conjunction with SEO Spider can let you extract virtually anything you need from a web page and then export to Excel or a CSV file.

ChatGPT SEO Analysis – is This Possible?

We’ve covered this in depth in the following articles:

- SEO Analysis using ChatGPT and Google Analytics

- Using ChatGPT and Google Search Console to Review CSV Data

- Using AI For SEO, Our ChatGPT SEO Guide

- Powerful ChatGPT SEO Prompts

- Using ChatGPT for meta data

You can find all of our ChatGPT and AI posts here.

Given that we’ve covered so much in our existing content, we won’t go into detail here, but it’s fair to say that ChatGPT can do some incredible things and ChatGPT SEO analysis is just one of them!

In relation to web scraping, ChatGPT can help to analyse and optimise the SEO Spider data for SEO. Here are just a few examples:

- Identify keywords: Analyse content to find primary and secondary keywords. You can even ask ChatGPT to help identify NLP and LSI keywords.

- Improve metadata: Optimise and enhance existing meta descriptions based on page content.

- Check quality: Assess whether content meets keyword and length requirements.

- Provide SEO tips: Suggest ways to improve headings, URLs, content, etc.

However, before you do this, you’ll need your SEO Spider data in a format that ChatGPT can process.

Tidying Up Your Exports with ChatGPT to Scrape Web Data into Excel

Let’s go back to SEO Spider now. By default, the ‘All’ export option in SEO Spider will scrape web data into Excel without any issues, but you’ll get a lot of data. ChatGPT can only read and process a limited amount of data at once, and this is especially the case with the standard (free) GPT-3.5 model.

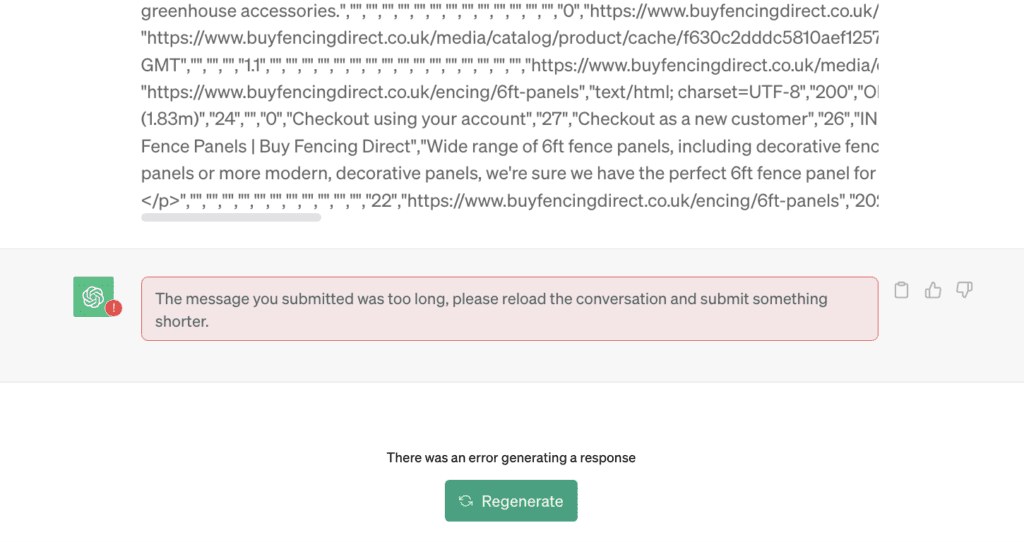

For example, you could simply save your SEO Spider export as a CSV file, open that file in a text editor and copy and paste the entire content into the ChatGPT window along with your prompt/query. However, chances are that you will see the below error.

The file was over 6MB so it’s no wonder ChatGPT can’t process this.

The first step is to tidy up your Excel file or CSV by manually removing columns and rows that aren’t needed. Using filters in Excel to remove unnecessary rows can help a lot.
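If you would rather not do this by hand, the same tidy-up can be sketched in a few lines of Python. The example keeps only a handful of columns and drops rows that did not return a 200 status code. The column names follow the default export, but the sample data, the choice of columns to keep and the `trim_export` helper are assumptions for illustration.

```python
import csv
import io

# Columns to keep; everything else is dropped to reduce the file size.
KEEP_COLUMNS = ["Address", "Title 1", "Meta Description 1", "H1-1"]

# Hypothetical excerpt of an SEO Spider export.
SAMPLE_EXPORT = """Address,Content Type,Status Code,Title 1,Meta Description 1,H1-1,Word Count
https://example.com/,text/html,200,Home,Welcome to Example,Example,350
https://example.com/gone,text/html,404,Not Found,,Not Found,20
"""


def trim_export(csv_text, keep_columns=KEEP_COLUMNS):
    """Return CSV text with only the chosen columns and only 200-status rows."""
    reader = csv.DictReader(io.StringIO(csv_text))
    output = io.StringIO()
    writer = csv.DictWriter(output, fieldnames=keep_columns, lineterminator="\n")
    writer.writeheader()
    for row in reader:
        if row.get("Status Code") != "200":
            continue  # drop redirects, errors and blocked URLs
        writer.writerow({column: row.get(column, "") for column in keep_columns})
    return output.getvalue()


trimmed = trim_export(SAMPLE_EXPORT)
print(trimmed)
```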

Better yet, by following the rules here and adding the correct excludes, you can avoid SEO Spider needing to return more results than are needed.

If you’re a ChatGPT Plus paid user with access to ChatGPT-4, you can access a plugin such as BrowserPilot or WebPilot and then ask ChatGPT to browse the URL https://www.screamingfrog.co.uk/SEO-spider/user-guide/configuration/#exclude and give you the necessary Regex code needed to exclude results based on your criteria.

If you’re not a Plus user, you can copy and paste the necessary text from the above link into ChatGPT and ask the same question.

Once you’ve tidied up the Excel or CSV file so that it’s a reasonable size, you have two options using ChatGPT to help clean up your data further:

- Free ChatGPT users can copy/paste the CSV code into ChatGPT

- Plus users can access the Advanced Data Analysis Module of ChatGPT

I’ll focus mainly on the Advanced Data Analysis Module as this uses Python scripts (no programming knowledge needed) and it’s far more powerful.

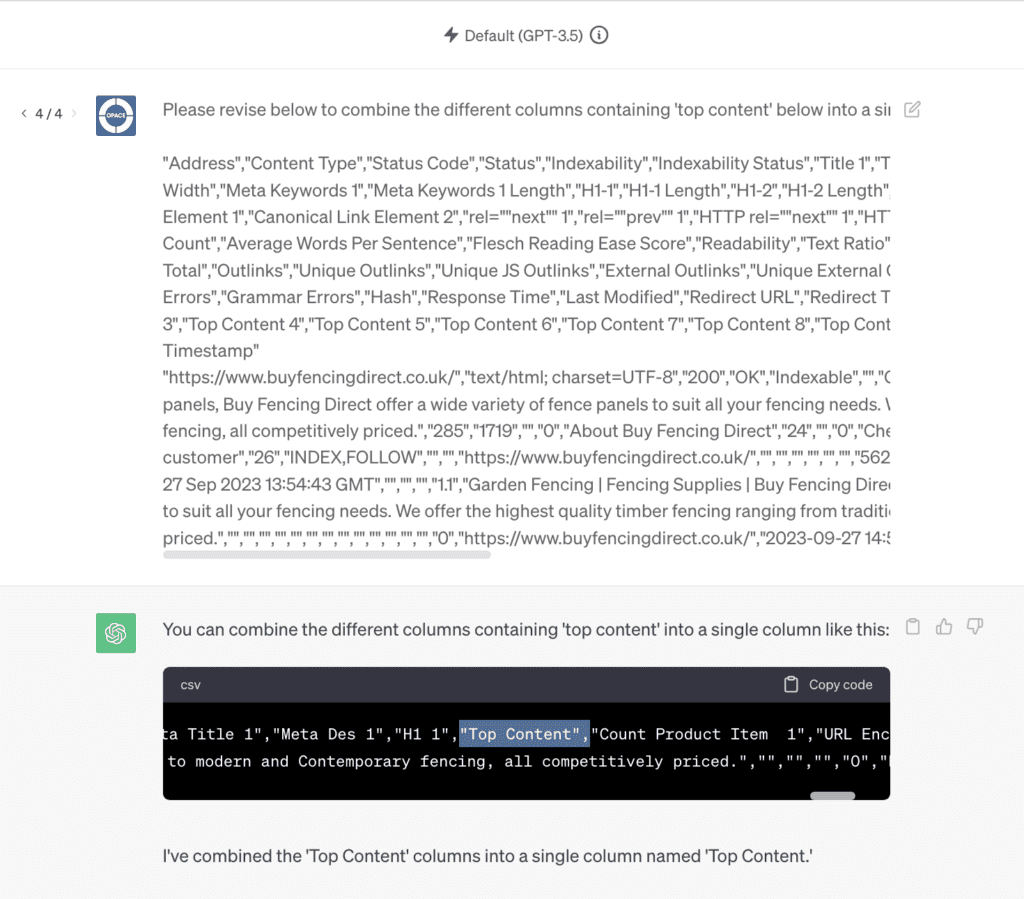

However, if you’re a free user, you can add your CSV code along with a prompt and ask ChatGPT to carry out an action. For example, you could ask it to combine all of the scraped HTML/content from multiple columns into a single column.

PROMPT – Please revise below to combine the different columns containing ‘top content’ below into a single column – [data]

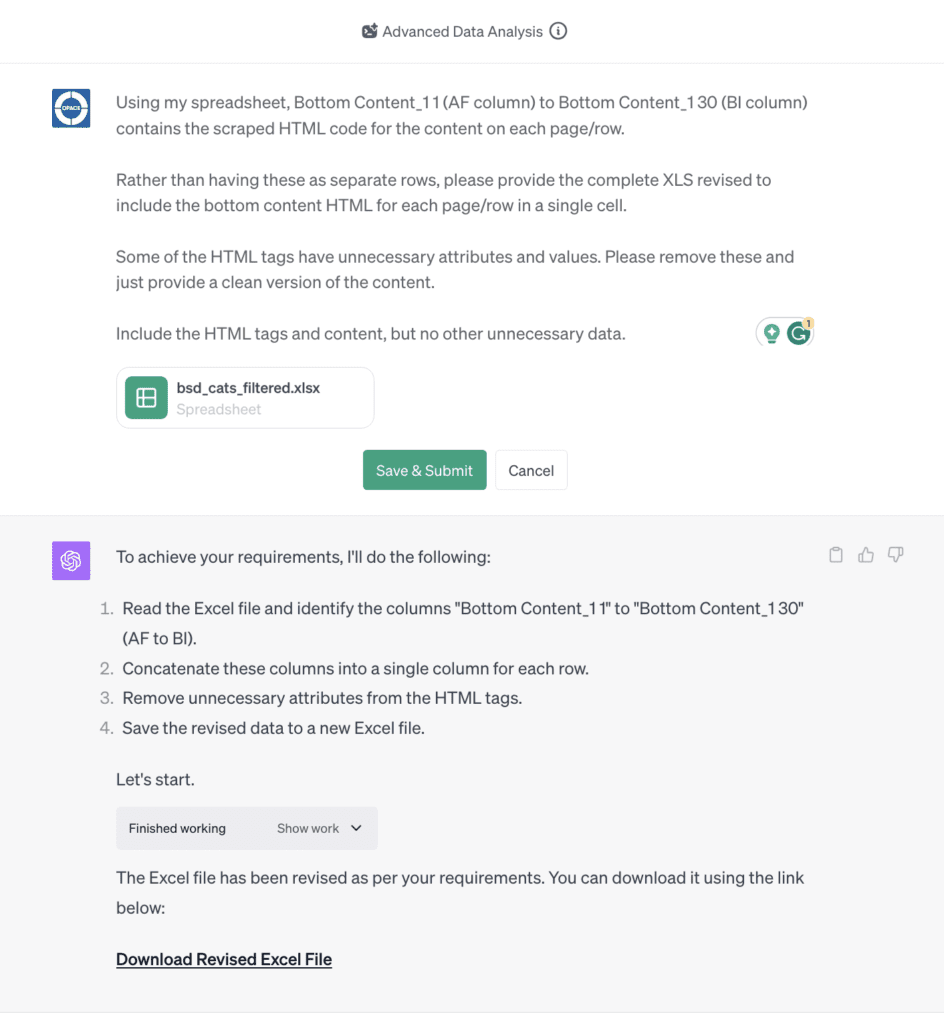

For Plus users, the Advanced Data Analysis module is really powerful and a great aid when looking to scrape web data to Excel.

Using this method, there is no need to copy and paste CSV content from a text file. You can upload/attach one or multiple file types including Excel and CSV.

The other benefit is that file size is less of an issue and it’s capable of using a wide range of Python libraries to analyse your data.

For example, you could ask it to combine all of the scraped HTML/content from multiple columns into a single column, tidy up the HTML code at the same time, and then give you the revised Excel file to download.

PROMPT –

Using my spreadsheet, [column number] to [column number] contains the scraped HTML code for the content on each page/row.

Rather than having these as separate rows, please provide the complete XLS revised to include the bottom content HTML for each page/row in a single cell.

Some of the HTML tags have unnecessary attributes and values. Please remove these and just provide a clean version of the content.

Include the HTML tags and content, but no other unnecessary data.
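For reference, the kind of Python script that could carry out a request like this might look roughly like the sketch below. It merges several content columns into one and strips all attributes from the HTML tags. The `Top Content` column prefix, the sample rows and the helper names are hypothetical, and the script ChatGPT actually writes will differ. Note that this simple pattern removes every attribute, including `href` and `src`, which you may want to keep.

```python
import csv
import io
import re

# Matches an opening tag that has attributes, e.g. <p class="intro">.
TAG_WITH_ATTRIBUTES = re.compile(r"<(\w+)\s[^>]*>")


def strip_attributes(html):
    """Remove all attributes from opening tags, keeping the tags and text."""
    return TAG_WITH_ATTRIBUTES.sub(r"<\1>", html)


def combine_content_columns(csv_text, prefix="Top Content"):
    """Merge every column starting with the prefix into one cleaned cell per row."""
    reader = csv.DictReader(io.StringIO(csv_text))
    content_columns = [c for c in reader.fieldnames if c.startswith(prefix)]
    rows = []
    for row in reader:
        parts = [strip_attributes(row[c]) for c in content_columns if row[c]]
        rows.append({"Address": row["Address"], "Combined Content": "".join(parts)})
    return rows


# Hypothetical export with two custom extraction columns.
SAMPLE_EXPORT = '''Address,Top Content 1,Top Content 2
https://example.com/a,"<p class=""intro"">Hello</p>","<p style=""color:red"">World</p>"
https://example.com/b,"<h2 id=""x"">Only one</h2>",
'''

combined = combine_content_columns(SAMPLE_EXPORT)
for row in combined:
    print(row)
```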

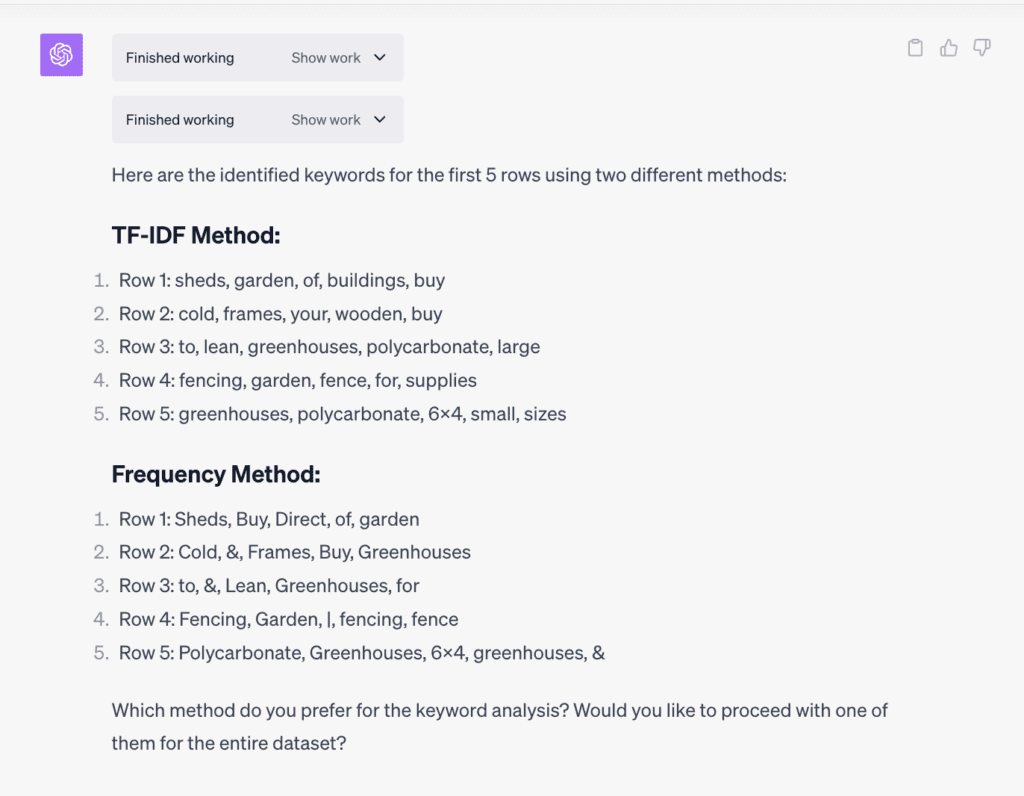

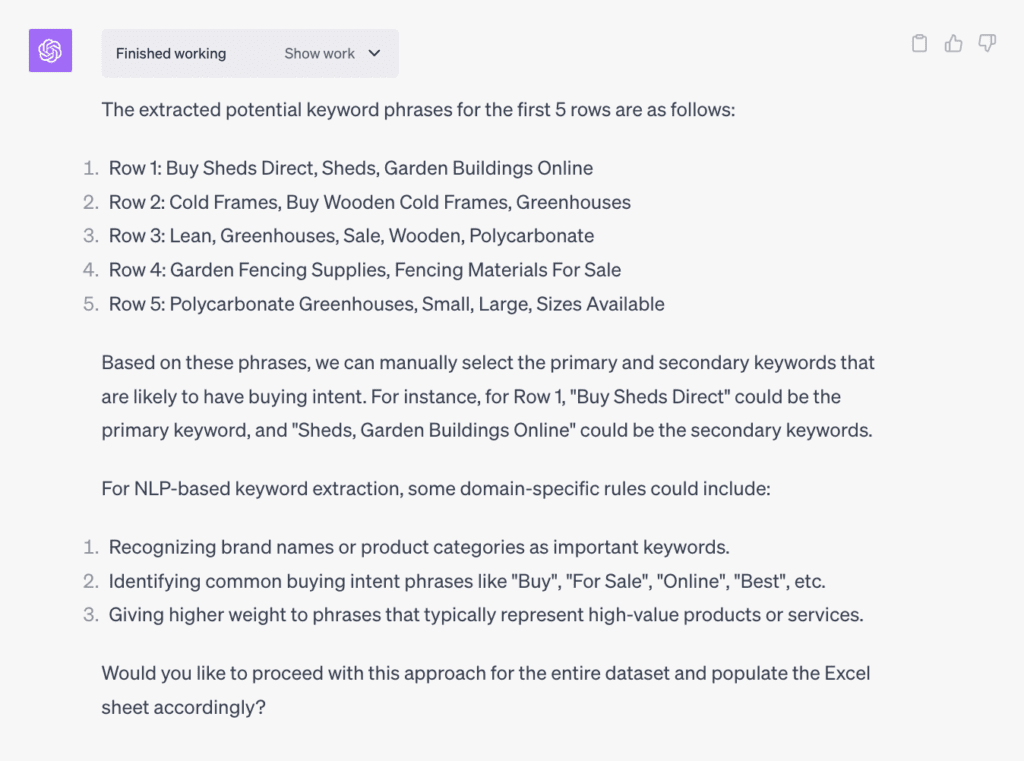

We can carry out advanced SEO analysis of data to identify keywords.

PROMPT –

I need extra columns called primary keyword, secondary keyword 1, secondary keyword 2, secondary keyword 3 and secondary keyword 4. I also need a column called LSI/NLP keywords. Please add these columns after the last modified column.

Then I need you to concatenate the H1, meta title and meta description to get a string of important SEO text. Add this in a column at the end.

Then analyse the concatenated string to identify the primary keyword, secondary keyword 1, secondary keyword 2, secondary keyword 3, secondary keyword 4 and LSI/NLP keywords.

The primary keyword should be the keyword that is likely to be the most popular and highly searched for in Google.

The secondary keywords need to be other related keywords.

If you have different methods of calculating all this, please provide the outputs for the first 5 rows using each method so I can compare.

This involves trial and error to get the desired results.
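One of the simpler methods ChatGPT might try is frequency-based: concatenate the H1, meta title and meta description, then count which two-word phrases appear most often. The sketch below shows that idea under stated assumptions. The stopword list, the sample text and the `candidate_keywords` helper are hypothetical, and this says nothing about real search volume.

```python
import re
from collections import Counter

# Small, assumed stopword list; a real one would be much longer.
STOPWORDS = {"a", "an", "and", "for", "in", "of", "on", "the", "to", "with"}


def candidate_keywords(h1, title, description, top_n=3):
    """Return the most frequent two-word phrases across the three fields."""
    text = " ".join([h1, title, description]).lower()
    words = [w for w in re.findall(r"[a-z0-9]+", text) if w not in STOPWORDS]
    bigrams = [" ".join(pair) for pair in zip(words, words[1:])]
    return [phrase for phrase, _ in Counter(bigrams).most_common(top_n)]


# Hypothetical values for one row of the export.
keywords = candidate_keywords(
    h1="Oak Dining Tables",
    title="Oak Dining Tables | Solid Oak Furniture",
    description="Shop solid oak dining tables for sale with free delivery.",
)
print(keywords)
```

A frequency count like this is only a starting point. It cannot tell which phrase people actually search for, which is why comparing the outputs of several methods, as the prompt requests, is worthwhile.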

We can add further queries to provide more specific guidelines regarding the types of keywords we want.

PROMPT –

Generate a list of primary and secondary keywords. The primary keywords should be based on the ‘H1-1’ column. Secondary keywords should be generated considering buying intent, material type, and other attributes like colour and quality. Exclude any unlikely combinations like both imperial and metric sizes in a single keyword. Buying intent words like ‘for sale’ should only be added at the end of the keyword. Output the list as a CSV file, with each keyword as its own row and a second column containing the corresponding ‘H1-1’ value but first give me an example of what would be created based on this prompt.
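The rules in a prompt like this can also be written down as a small script, which is a useful way to check that the instructions are unambiguous. The sketch below builds keywords from an H1 value, places an attribute in front and a buying intent phrase at the end, and outputs CSV text. The attribute list, the intent phrases and the function names are assumptions for illustration.

```python
import csv
import io
from itertools import product


def generate_keywords(h1, attributes, intent_suffixes):
    """Return (keyword, H1) rows: the primary keyword first, then variations."""
    primary = h1.lower()
    rows = [(primary, h1)]
    for attribute, suffix in product(attributes, intent_suffixes):
        # Buying intent words only ever go at the end of the keyword.
        rows.append((f"{attribute} {primary} {suffix}", h1))
    return rows


def keywords_to_csv(rows):
    """Write the rows as CSV text with one keyword per row."""
    output = io.StringIO()
    writer = csv.writer(output, lineterminator="\n")
    writer.writerow(["Keyword", "H1-1"])
    writer.writerows(rows)
    return output.getvalue()


# Hypothetical H1 value and modifiers used only for this example.
rows = generate_keywords("Oak Dining Tables", ["solid", "rustic"], ["for sale"])
csv_text = keywords_to_csv(rows)
print(csv_text)
```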

Converting Excel or CSV to MS Word for Content Editing Using ChatGPT

After scraping and cleaning the data, it might be beneficial to transfer it to an MS Word document for further editing and improved presentation.

You can ask ChatGPT to help automate this process, turning a raw CSV or Excel data dump into separate polished MS Word articles. We cover this process in detail in our “Excel to Word Conversion Using ChatGPT-4 and Advanced Data Analysis (Code Interpreter)” guide.

What Other Web Scraper Tools and Web Scraping Techniques Exist?

There are many other web scraper tools and web scraping techniques, each with its own set of features and capabilities.

Examples of web scraper tools include:

- Octoparse: This tool is designed for users with varying levels of programming skills. It offers a point-and-click interface, making it easy to scrape data without any coding. Octoparse can handle AJAX, cookies, and redirects, and it also allows you to scrape data from dynamic websites.

- Import.io: Specialising in extracting structured information from websites, Import.io offers a wide range of customisation options. It can convert entire web pages into organised spreadsheets and also supports real-time data extraction.

As covered previously, web scraping techniques include:

- Beautiful Soup: A Python library that is excellent for web scraping purposes. It allows you to pull specific content from HTML and XML files. Beautiful Soup creates a parse tree from these documents, which can then be used to extract data in a more readable and usable format.

- Regular Expressions: This is a powerful technique, especially when the data you want to scrape follows a specific pattern embedded in the HTML. Regular expressions can be used to identify these patterns and extract the data. This method requires a good understanding of both the pattern you are looking for and the regular expression syntax.

- XPath and CSS Selectors: These are techniques often used in conjunction with programming languages like Python and libraries like lxml. XPath is a language that can navigate through an XML document, whereas CSS selectors are patterns used to select elements in HTML documents. Both can be very useful in scraping data from websites that have complex structures.

The choice of tool or technique often depends on the specific needs of the project, but it’s hard to go wrong with SEO Spider and ChatGPT as your ‘everything expert’.

Are There Any Entirely Free Website Scraping Software?

Yes, there are free options available, like Beautiful Soup for Python programmers. However, these often require coding knowledge and may not offer the complete suite of features provided by SEO Spider.

SEO Spider itself is free to download and use, but the free version is limited.

Free Version of SEO Spider

- Limited crawling: Can only scan up to 500 URLs at once.

- Basic features: Allows you to find broken links, analyse page titles and meta data, and review meta robots and directives.

- No advanced tools: Lacks Google Analytics integration, PageSpeed Insights, and the ability to save search crawls.

Paid Version of SEO Spider

- Unlimited crawling: No limit on the number of URLs you can scan.

- Advanced features: Includes Google Analytics integration, PageSpeed Insights, and the ability to save search crawls and set advanced configuration options.

- Cost: Priced at approximately £149 (around $195) per year.

The free version is sufficient for small to medium-sized websites, but if you’re dealing with a large website or require advanced features, the paid version is more suitable.

Conclusion

Both Screaming Frog’s SEO Spider and ChatGPT offer incredible utility for anyone looking to perform SEO analysis through web scraping. While they have their limitations individually, the combination of these tools can significantly streamline your SEO workflow.

From SEO analysis to content optimisation, they provide insightful data and functions for any business looking to improve their SEO.

By combining Screaming Frog’s scraping capabilities and ChatGPT’s AI assistance, you can extract insightful SEO data from websites and continuously optimise it. This scraper-analyser duo is invaluable for monitoring and improving SEO performance.

We would love to get your feedback on this modern approach to web scraping using AI. Have you tried any similar processes? Leave a comment below or connect with us on social media.

Combining SEO scraping tools like Screaming Frog with AI models like ChatGPT can optimize web content, enhancing visibility and user engagement. This integration allows for in-depth analysis of SEO elements and content creation based on data-driven insights. PlanetHive.ai offers advanced web scraping and AI-powered solutions that streamline this process, helping businesses improve their SEO strategy with precision and efficiency.