Top SEO Tips for Using robots.txt and Mistakes to Avoid

Most websites have a robots.txt file, but not all webmasters make it work hard to power SEO.

The robots.txt file plays an important part in your site’s online visibility and SEO. However, many webmasters overlook how this file is formatted and miss out on some powerful directives that can make crawling more efficient and help avoid duplicate content problems.

What is robots.txt?

The robots.txt file is a plain text file that implements the Robots Exclusion Protocol. Webmasters create it to give instructions to web robots such as search engine crawlers. It is the primary way to tell search engines which areas of your website they should crawl and, just as importantly, which they should not.

Why is robots.txt important for SEO?

This small file is part of most websites but is often overlooked when it comes to SEO, which is a big mistake. The robots.txt file should be an important element of your SEO strategy. Think of it as a doorman that decides which crawlers are welcomed into your website and which are asked to stay away.

Your robots.txt file asks search engines to stay out of certain parts of your site whilst welcoming them to others, just like a doorman.
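The doorman idea can be sketched with Python’s standard-library `urllib.robotparser`. This is only an illustration: the rules, the `/admin/` path and the `example.com` URLs are made up for the example, and `is_allowed` is a hypothetical helper name.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: ask every crawler to stay out of /admin/, allow the rest.
EXAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the rules in robots_txt let user_agent crawl url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for url in ("https://www.example.com/blog/post",
                "https://www.example.com/admin/login"):
        print(url, is_allowed(EXAMPLE_ROBOTS_TXT, "Googlebot", url))
```

A well-behaved crawler performs a check along these lines before it requests a page; nothing in the file itself stops a crawler that chooses to ignore it.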

Attracting Traffic Using robots.txt

A site does not strictly need a robots.txt file in order to be found via search engines: if the file is missing, crawlers generally assume they may crawl everything. What matters is that the file does not block the pages you want to rank. A crawler that is disallowed from a page cannot read its content and therefore cannot reliably return it to users for a relevant search query.

You can use Google Search Console to check how Google reads your robots.txt file and to request a recrawl so that any changes are picked up.

Avoiding Traffic Using robots.txt

Firstly, why would you want to avoid traffic coming to your website? Surely, successful SEO is all about increasing your site’s visibility, right?

Wrong.

Successful SEO is all about increasing the quality of your visibility.

What do we mean by this?

Well, there is no such thing as an ‘average’ number of pages per site, but for many organisations a website may contain several hundred, if not thousands of, pages. If every one of these pages is left open to crawling, search engine crawlers like Googlebot can spend much of their crawl budget on low-value pages and place extra load on your server.

As Google points out, you may choose to hide certain webpages from them that are unimportant or similar. This can occur when you have duplicate content on your site that is purposeful, such as printer-friendly versions of a page.

A second benefit of doing this is that it helps you avoid the SEO problems that duplicate content can cause.

You can also use the robots.txt file to keep crawlers away from certain files, such as images and PDFs.

Lastly, by reducing the number of pages that are crawled you reduce the load that crawlers place on your server, which can help keep your site responsive. This matters for your performance on SERPs, as speed is one of the factors in how sites are ranked in the results.
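As a rough sketch, a robots.txt that keeps crawlers away from printer-friendly pages and file folders could be generated and checked like this. The directory names and the `build_robots_txt` helper are assumptions made for the example, not paths taken from any real site. The standard-library parser only does simple prefix matching, so the sketch uses directory prefixes rather than wildcard patterns.

```python
from urllib.robotparser import RobotFileParser


def build_robots_txt(disallowed_paths, user_agent="*"):
    """Build robots.txt text with one Disallow line per path prefix."""
    lines = [f"User-agent: {user_agent}"]
    lines += [f"Disallow: {path}" for path in disallowed_paths]
    return "\n".join(lines) + "\n"


# Hypothetical folders holding printer-friendly pages, PDFs and images.
robots_txt = build_robots_txt(["/print/", "/downloads/pdf/", "/images/private/"])

# Check the generated rules the way a compliant crawler would read them.
parser = RobotFileParser()
parser.parse(robots_txt.splitlines())

if __name__ == "__main__":
    print(robots_txt)
    print(parser.can_fetch("Googlebot", "https://www.example.com/print/about-us"))
    print(parser.can_fetch("Googlebot", "https://www.example.com/about-us"))
```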

Common Mistakes to Avoid When Using robots.txt

You should never use this file as the primary way to hide web pages from Google search results. This is because a disallowed page can still be indexed if other pages link to it. If you want to hide a page completely, use a noindex directive or password protection instead. Do not rely on a noindex directive for a page that is also disallowed in robots.txt: the web crawler is blocked from fetching the page and so will never see the noindex directive!
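One way to catch this conflict is to compare the pages that carry a noindex directive against the robots.txt rules. The sketch below assumes you already have a list of such pages; the URLs, the rules and the `find_hidden_noindex` name are all hypothetical.

```python
from urllib.robotparser import RobotFileParser


def find_hidden_noindex(robots_txt, noindex_urls, user_agent="Googlebot"):
    """Return the noindex pages a compliant crawler is not allowed to fetch.

    A crawler blocked from these pages cannot see their noindex directive.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in noindex_urls if not parser.can_fetch(user_agent, url)]


# Hypothetical rules and pages, for illustration only.
RULES = """\
User-agent: *
Disallow: /print/
"""
NOINDEX_PAGES = [
    "https://www.example.com/print/pricing",
    "https://www.example.com/thank-you",
]

if __name__ == "__main__":
    for url in find_hidden_noindex(RULES, NOINDEX_PAGES):
        print("noindex will not be seen on:", url)
```

Any page the function returns needs either its Disallow rule or its noindex directive removed, depending on which behaviour you actually want.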

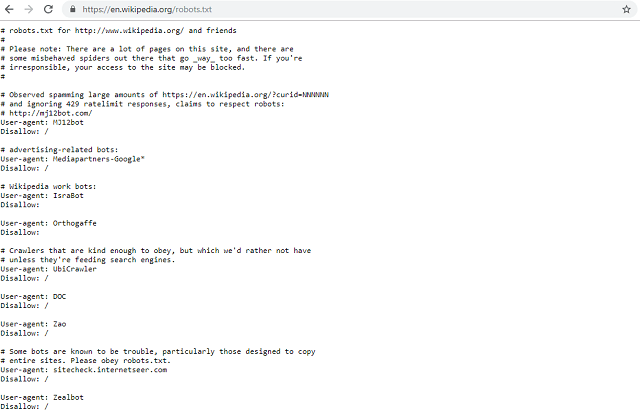

Your robots.txt file is a public document: anyone can view a site’s directives by adding /robots.txt to the end of its root domain. It is therefore essential that you do not embed any sensitive or private information within this file.
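To show how easy the file is to locate, here is a small sketch that derives the robots.txt address from any page URL. The `robots_txt_url` helper and the `example.com` address are assumptions for illustration.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url: str) -> str:
    """Return the robots.txt URL for the site that serves page_url."""
    parts = urlsplit(page_url)
    # Keep the scheme and host, drop the path, query string and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


if __name__ == "__main__":
    print(robots_txt_url("https://www.example.com/blog/post?id=1"))
```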

This robots.txt file from Wikipedia includes directives for search engines but also text which is intended for humans.

If you make significant changes to your robots.txt file then you should resubmit it to the big search engines straight away. Most refresh their copy of the file at least once a day, but resubmitting means your changes take effect more quickly.

Understanding the Limitations of robots.txt

It is important to remember that whilst the robots.txt file is a standard protocol used by many search engines, it is a request and not an enforceable instruction. Many of the larger search engines respect the directives in this file, but other crawlers do not. If you are concerned about security and wish to keep some pages private, you will need to use other methods to block access.

Even if your robots.txt file blocks a page from web crawlers, this does not prevent it from being indexed by search engines if that page is linked to from another website. To prevent this, leave the page crawlable and add a noindex meta tag or an X-Robots-Tag response header. If security is a concern then password protection should also be employed on these pages.

Lastly, the syntax of robots.txt is fairly standard across most crawlers, but support for individual directives can vary, so it is important to check that your directives are correctly formatted for each crawler you care about.
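A response header can be added by whatever serves your pages. As a minimal sketch, the WSGI application below sends `X-Robots-Tag: noindex` for some paths; the path prefixes and the names `NOINDEX_PREFIXES` and `app` are assumptions, and a real site would more likely set the header in its web server or CMS configuration.

```python
# Hypothetical sections of the site that should stay out of search results.
NOINDEX_PREFIXES = ("/print/", "/internal/")


def app(environ, start_response):
    """Minimal WSGI app that marks selected paths as noindex."""
    path = environ.get("PATH_INFO", "/")
    headers = [("Content-Type", "text/plain; charset=utf-8")]
    if path.startswith(NOINDEX_PREFIXES):
        # Crawlers that fetch this page are asked not to index it.
        headers.append(("X-Robots-Tag", "noindex"))
    start_response("200 OK", headers)
    return [b"Hello from " + path.encode("utf-8")]


if __name__ == "__main__":
    from wsgiref.simple_server import make_server

    # Serve locally on port 8000 to inspect the headers with a browser or curl.
    with make_server("127.0.0.1", 8000, app) as server:
        server.serve_forever()
```

The header only works if crawlers are allowed to fetch the page, so the same paths should not also be disallowed in robots.txt.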
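Directives can also be grouped per crawler, so it is worth checking each user agent separately. The sketch below uses Python’s standard-library parser, which is only one interpretation of the syntax; the groups and paths are invented for the example.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical file with one group for Googlebot and one for everyone else.
PER_CRAWLER_RULES = """\
User-agent: Googlebot
Disallow: /drafts/

User-agent: *
Disallow: /drafts/
Disallow: /search/
"""


def allowed_by_agent(robots_txt, url, user_agents):
    """Map each user agent to whether the rules let it crawl url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {agent: parser.can_fetch(agent, url) for agent in user_agents}


if __name__ == "__main__":
    url = "https://www.example.com/search/results"
    print(allowed_by_agent(PER_CRAWLER_RULES, url, ["Googlebot", "Bingbot"]))
```

Here the same URL is open to one crawler and closed to another, which is easy to miss when you only test against a single user agent.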

Get your website seen in search engine results by making sure the web crawlers can index you efficiently.

Opace and SEO

An accredited and results-driven digital agency, Opace is a specialist SEO consultancy offering a range of services from penalty removals and SEO audits to fully delivered digital marketing strategies.

To find out more about optimising your robots.txt file and other SEO tips to improve online visibility, contact us today.

Image Credits: Commons Wikipedia, Library of Congress, Wikipedia and Pixabay/mohamed_hassan
